Why we built an AI supercomputer in the cloud

Introducing Vela, IBM’s first AI-optimized, cloud-native supercomputer.

AI models are increasingly pervading every aspect of our lives and work. With each passing year, more complex models, new techniques, and new use cases require more compute power to meet the growing demand for AI.

One of the most pertinent recent examples has been the advent of foundation models, AI models trained on a broad set of unlabeled data that can be used for many different tasks with minimal fine-tuning. But these sorts of models are massive, in some cases with many billions of parameters. To train models at this scale, you need supercomputers, systems composed of many powerful compute elements working together to solve big problems with high performance.

Traditionally, building a supercomputer has meant bare metal nodes, high-performance networking hardware (like InfiniBand, Omni-Path, and Slingshot), parallel file systems, and other items usually associated with high-performance computing (HPC). But traditional supercomputers weren’t designed for AI; they were designed to perform well on modeling or simulation tasks, like those defined by the US national laboratories or by other customers with specific needs.

While these systems do perform well for AI, and many “AI supercomputers” (such as the one built for OpenAI) continue to follow this pattern, the traditional design point has historically driven technology choices that increase cost and limit deployment flexibility. We’ve recently been asking ourselves: what system would we design if we were exclusively focused on large-scale AI?

This led us to build IBM’s first AI-optimized, cloud-native supercomputer, Vela. It has been online since May of 2022, housed within IBM Cloud, and is currently just for use by the IBM Research community. The choices we’ve made with this design give us the flexibility to scale up at will and readily deploy similar infrastructure into any IBM Cloud data center across the globe. Vela is now our go-to environment for IBM Researchers creating our most advanced AI capabilities, including our work on foundation models, and it is where we collaborate with partners to train models of many kinds.

IBM has deep roots in the world of supercomputing, having designed generations of top-performing systems ranked on the TOP500 list. This includes Summit and Sierra, some of the most powerful supercomputers in the world today. With each system we design, we discover new ways to improve performance, resiliency, and cost for workloads of interest, increase researcher productivity, and better align with the needs of our customers and partners.

Last year, we set out with the goal of compressing the time to build and deploy world-class AI models to the greatest extent possible. This seemingly simple goal kicked off a healthy internal debate: Do we build our system on-premises, using the traditional supercomputing model, or do we build this system into the cloud, in essence building a supercomputer that is also a cloud? In the latter model, we might compromise a bit on performance, but we would gain considerably in productivity. In the cloud, we configure all the resources we need through software, use a robust and established API, and gain access to a broader ecosystem of services to integrate with. We can leverage data sets residing on IBM’s Cloud Object Store instead of building our own storage back end. We can leverage IBM Cloud’s VPC capability to collaborate with partners using advanced security practices. The list of potential advantages for our productivity went on and on. As the debate unfolded, it became clear that we needed to build a cloud-native AI supercomputer. Here’s how we did it.

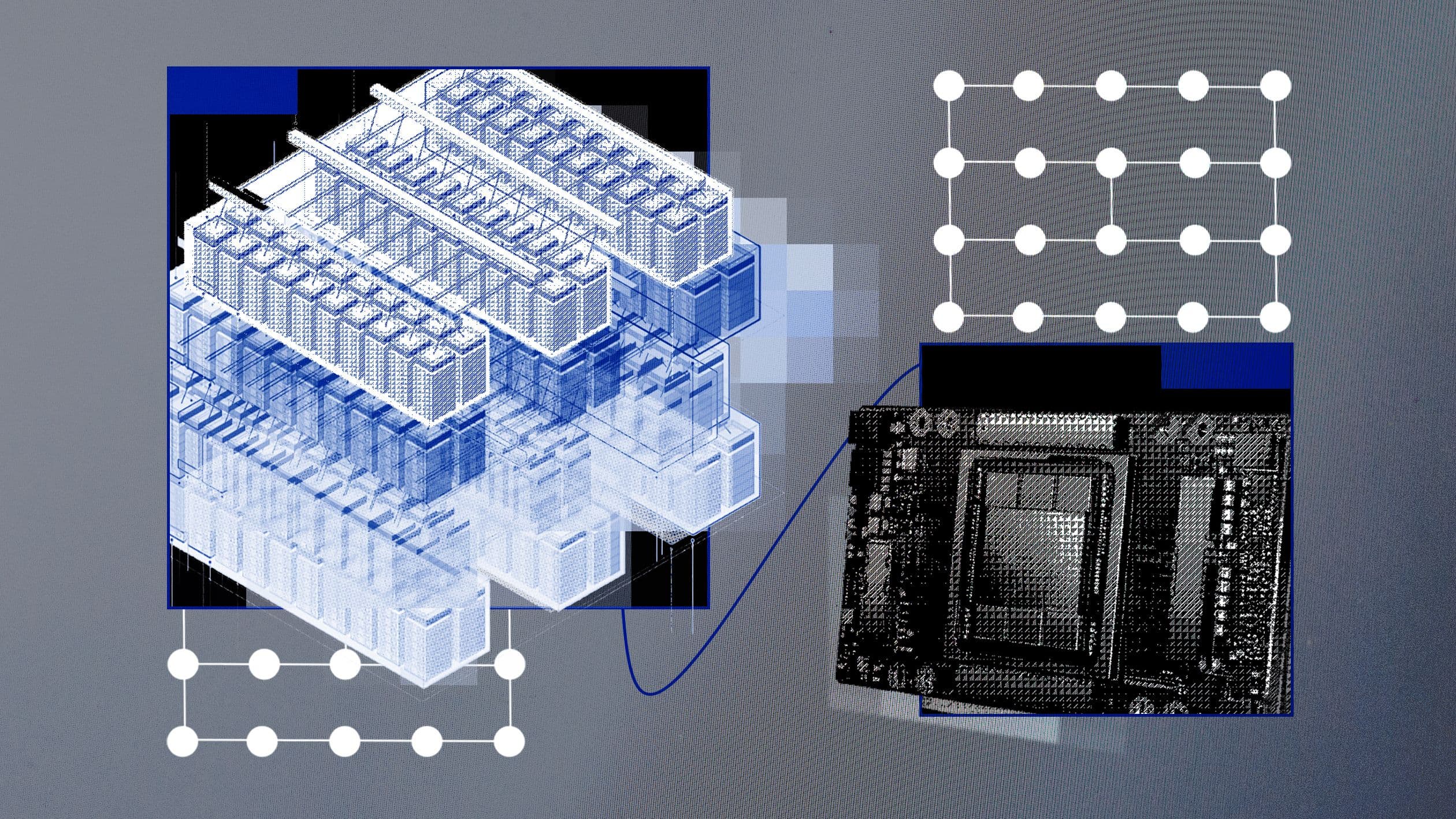

When it comes to AI-centric infrastructure, one non-negotiable requirement is the need for nodes with many GPUs, or AI accelerators. To configure those nodes, we had two choices: either make each node provisionable as bare metal, or enable configuration of the node as a virtual machine (VM).[1] It’s generally accepted that bare metal is the path to maximizing AI performance, but VMs provide more flexibility. Going the VM route would enable our service teams to provision and re-provision the infrastructure with different software stacks required by different AI users. We knew, for example, that when this system came online some of our researchers were using HPC software and schedulers, like Spectrum LSF. We also knew that many researchers had migrated to our cloud-native software stack, based on OpenShift. VMs would make it easy for our support team to scale AI clusters dynamically and shift resources between workloads of various kinds in a matter of minutes. A comparison of the traditional HPC software stack and the cloud-native AI stack is shown in Figure 1. But the downside of a cloud-native stack with virtualization, historically, is that it reduces node performance.

So, we asked ourselves: how do we deliver bare-metal performance inside of a VM? Following a significant amount of research and discovery, we devised a way to expose all of the capabilities on the node (GPUs, CPUs, networking, and storage) into the VM so that the virtualization overhead is less than 5%, which is the lowest overhead in the industry that we’re aware of. This work includes configuring the bare-metal host for virtualization with support for Virtual Machine Extensions (VMX), single-root IO virtualization (SR-IOV), and huge pages. We also needed to faithfully represent all devices and their connectivity inside the VM, such as which network cards are connected to which CPUs and GPUs, how GPUs are connected to the CPU sockets, and how GPUs are connected to each other. These, along with other hardware and software configurations, enabled our system to achieve close to bare metal performance.
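As a rough illustration of the host-side prerequisites mentioned above, the following sketch checks two of them on a Linux host: whether the CPU advertises the `vmx` flag and whether huge pages have been reserved. It is not IBM’s tooling. The function names are hypothetical, the sample strings are made up, and the only assumption about the system is the standard text layout of `/proc/cpuinfo` and `/proc/meminfo`.

```python
import re


def has_cpu_flag(cpuinfo_text: str, flag: str) -> bool:
    """Return True if `flag` appears on any 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            if flag in value.split():
                return True
    return False


def hugepages_total(meminfo_text: str) -> int:
    """Return the HugePages_Total count from /proc/meminfo text (0 if absent)."""
    match = re.search(r"^HugePages_Total:\s+(\d+)", meminfo_text, re.MULTILINE)
    return int(match.group(1)) if match else 0


# Made-up sample text in the same layout as the real files.
# On a real host, pass open("/proc/cpuinfo").read() and
# open("/proc/meminfo").read() instead.
sample_cpuinfo = "processor\t: 0\nflags\t\t: fpu vme sse sse2 vmx\n"
sample_meminfo = "MemTotal:       1583000000 kB\nHugePages_Total:    2048\n"

print("vmx available:", has_cpu_flag(sample_cpuinfo, "vmx"))
print("huge pages reserved:", hugepages_total(sample_meminfo))
```

A check like this only confirms that the host can be configured for virtualization; it says nothing about how device topology is presented inside the VM, which the paragraph above identifies as the other half of the work.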

A second important choice was on the design of the AI node. Given the desire to use Vela to train large models, we opted for large GPU memory (80 GB), and a significant amount of memory and local storage on the node (1.5TB of DRAM, and four 3.2TB NVMe drives). We anticipated that large memory and storage configurations would be important for caching AI training data, models, other related artifacts, and feeding the GPUs with data to keep them busy.

A third important dimension affecting the system’s performance is its network design. Given our desire to operate Vela as part of a cloud, building a separate InfiniBand-like network just for this system would defeat the purpose of the exercise. We needed to stick to the standard Ethernet-based networking that typically gets deployed in a cloud. But traditional supercomputing wisdom states that you need a highly specialized network. The question therefore became: what do we need to do to prevent our standard, Ethernet-based network from becoming a significant bottleneck?

We got started by simply enabling SR-IOV for our network interface cards on each node, thereby exposing each 100G link directly into the VMs via virtual functions. In doing so, we were also able to use all of IBM Cloud’s VPC network capabilities, such as security groups, network access control lists, custom routes, private access to PaaS services of IBM Cloud, and access to Direct Link and Transit Gateway services.
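For readers unfamiliar with SR-IOV, here is a minimal sketch of how virtual function counts can be inspected on a Linux host. It reads the standard `sriov_numvfs` and `sriov_totalvfs` files that the kernel exposes under a NIC’s sysfs device directory. The interface name `ens1f0` and the helper name are hypothetical, and this is not a description of how Vela’s hosts are actually managed.

```python
from pathlib import Path


def sriov_vf_counts(iface: str, sysfs_root: str = "/sys/class/net"):
    """Return (enabled_vfs, max_vfs) for a NIC, or None where a file is missing.

    Reads the kernel's sriov_numvfs and sriov_totalvfs sysfs attributes.
    """
    device_dir = Path(sysfs_root) / iface / "device"

    def read_int(name: str):
        path = device_dir / name
        return int(path.read_text().strip()) if path.exists() else None

    return read_int("sriov_numvfs"), read_int("sriov_totalvfs")


# "ens1f0" is a placeholder interface name; on a machine without that
# interface (or without SR-IOV support) both values are None.
enabled, maximum = sriov_vf_counts("ens1f0")
print(f"virtual functions enabled: {enabled}, supported: {maximum}")
```

Each enabled virtual function appears as its own PCI device that can be passed through to a VM, which is what lets a guest drive the physical link without going through a software switch on the host.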

The results we recently published with PyTorch showed that by optimizing the workload communication patterns, controllable at the PyTorch level, we can hide the communication time over the network behind compute time occurring on the GPUs. This approach is aided by our choice of GPUs with 80GB of memory (discussed above), which allows us to use bigger batch sizes (compared to the 40GB model) and leverage the Fully Sharded Data Parallel (FSDP) training strategy more efficiently. In this way, we can use our GPUs in distributed training runs with efficiencies of up to 90% and beyond for models with 10+ billion parameters. Next, we’ll be rolling out an implementation of remote direct memory access (RDMA) over Converged Ethernet (RoCE) at scale and GPUDirect RDMA (GDR), to deliver the performance benefits of RDMA and GDR while minimizing adverse impact to other traffic. Our lab measurements indicate that this will cut latency in half.
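To see why hiding communication behind compute matters so much on a standard network, consider the toy model below. It treats one training step as a block of GPU compute plus a block of network communication, some fraction of which runs concurrently with compute. The model and all numbers in it are illustrative assumptions, not measurements from Vela or a description of how PyTorch schedules FSDP traffic.

```python
def step_time(compute_s: float, comm_s: float, overlap_fraction: float) -> float:
    """Time for one training step when part of the communication is hidden.

    overlap_fraction is the share of communication that runs concurrently
    with compute (0.0 = fully serialized, 1.0 = fully overlapped). The hidden
    portion can never exceed the compute time available to hide it behind.
    """
    hidden = min(comm_s * overlap_fraction, compute_s)
    return compute_s + comm_s - hidden


def gpu_efficiency(compute_s: float, comm_s: float, overlap_fraction: float) -> float:
    """Fraction of the step during which the GPUs are doing useful compute."""
    return compute_s / step_time(compute_s, comm_s, overlap_fraction)


# Illustrative numbers only: 1.0 s of compute and 0.5 s of communication.
for overlap in (0.0, 0.8, 1.0):
    eff = gpu_efficiency(compute_s=1.0, comm_s=0.5, overlap_fraction=overlap)
    print(f"overlap {overlap:.0%}: efficiency {eff:.1%}")
```

In this simplified picture, a larger batch size increases compute time per step relative to communication, which leaves more room to hide network traffic. That is one way to read the benefit of the 80GB GPUs described above.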

Each of Vela’s nodes has eight 80GB A100 GPUs, which are connected to each other by NVLink and NVSwitch. In addition, each node has two 2nd Generation Intel Xeon Scalable processors (Cascade Lake), 1.5TB of DRAM, and four 3.2TB NVMe drives. To support distributed training, the compute nodes are connected via multiple 100G network interfaces in a two-level Clos structure with no oversubscription. To support high availability, we built redundancy into the system: each port of the network interface card (NIC) is connected to a different top-of-rack (TOR) switch, and each TOR switch is connected via two 100G links to each of four spine switches. This provides 1.6 Tb/s of cross-rack bandwidth and ensures that the system can continue to operate despite the failure of any given NIC, TOR, or spine switch. Multiple microbenchmarks, including iperf and NVIDIA Collective Communication Library (NCCL) tests, show that applications can drive close to line rate for node-to-node TCP communication.
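The cross-rack bandwidth figure can be reproduced with simple arithmetic, sketched below. Two assumptions are made here that the text does not state outright: that each TOR switch has two 100G links to each of the four spine switches, and that a rack has two TOR switches (one per NIC port). The 1.6 figure is read as terabits per second. The constant and function names are hypothetical.

```python
LINK_GBPS = 100          # speed of one uplink, from the text
LINKS_PER_SPINE = 2      # assumption: two links from a TOR to each spine
SPINE_SWITCHES = 4       # from the text
TORS_PER_RACK = 2        # assumption: one TOR per NIC port, dual-port NICs


def tor_uplink_gbps() -> int:
    """Aggregate uplink bandwidth of a single TOR switch, in Gb/s."""
    return LINK_GBPS * LINKS_PER_SPINE * SPINE_SWITCHES


def rack_uplink_gbps(working_tors: int = TORS_PER_RACK) -> int:
    """Aggregate cross-rack bandwidth with the given number of healthy TORs."""
    return tor_uplink_gbps() * working_tors


print("per TOR:", tor_uplink_gbps(), "Gb/s")
print("per rack:", rack_uplink_gbps() / 1000, "Tb/s")
print("per rack with one TOR down:", rack_uplink_gbps(1) / 1000, "Tb/s")
```

Under these assumptions, losing one TOR switch halves the rack’s cross-rack bandwidth but does not disconnect it, which matches the redundancy goal described above.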

While this work was done with an eye towards delivering performance and flexibility for large-scale AI workloads, the infrastructure was designed to be deployable in any of our worldwide data centers at any scale. It is also natively integrated into IBM Cloud’s VPC environment, meaning that the AI workloads can use any of the more than 200 IBM Cloud services currently available. While the work was done in the context of a public cloud, the architecture could also be adopted for on-premises AI system design.

Having the right tools and infrastructure is a critical ingredient for R&D productivity. Many teams choose to follow the “tried and true” path of building traditional supercomputers for AI. While there is clearly nothing wrong with this approach, we’ve been working on a better solution that provides the dual benefits of high-performance computing and high end-user productivity, enabled by a hybrid cloud development experience.[2] Vela has been online since May 2022 and is in productive use by dozens of AI researchers at IBM Research, who are training models with tens of billions of parameters. We’re looking forward to sharing more about upcoming improvements to both end-user productivity and performance, enabled by emerging systems and software innovations.[2] We are also excited about the opportunities that will be enabled by our AI-optimized processor, the IBM AIU, and will be sharing more about this in future communications. The era of cloud-native AI supercomputing has only just begun. If you are considering building an AI system or want to know more, please contact us.

References

[1] How to Deploy a High-Performance Distributed AI Training Cluster with NVIDIA A100 GPUs and KVM, at GTC 2022.

[2] Seetharami Seelam, Keynote Talk - Hardware-Middleware System co-design for foundation models, at the 23rd ACM/IFIP International Middleware Conference, Quebec City, Quebec, Canada.
