Securing AI systems with adversarial robustness

AI workflows running in the real world can be vulnerable to adversarial attacks. We’re working to help them resist hacks, rooting out weaknesses, anticipating new strategies, and designing robust models that perform as well in the wild as they do in a sandbox. In short, we need to make AI hack-proof.

In recent years, there’s been an explosion of AI datasets and models that affect millions of people around the world every day. Some systems recommend songs and movies; others automate fundamental business processes or save lives by detecting tumors. In the near future, machines relying on AI will drive our cars, fly our aircraft, and manage our care. But before that can happen, we need to ensure that those systems are robust against attempts to hack them.

During development of an AI model, conditions are carefully controlled to obtain the best possible performance — like starting a seedling in a greenhouse. But in the real world, where models are ultimately deployed, conditions are rarely perfect, and risks are abundant. If development is a greenhouse, then deployment is a jungle. We have to prepare AI models to withstand the onslaught.

My colleagues and I are pursuing this objective on several fronts, reflected in many of our papers at the 2021 Neural Information Processing Systems (NeurIPS) conference this month. This active field of research, known as adversarial machine learning, aims to bridge the gap between development and deployment of AI models, making them robust to adversity.

In the real world, AI models can encounter both incidental adversity, such as when data becomes corrupted, and intentional adversity, such as when hackers actively sabotage them. Both can mislead a model into delivering incorrect predictions or results. Adversarial robustness refers to a model’s ability to resist being fooled. Our recent work looks to improve the adversarial robustness of AI models, making them more impervious to irregularities and attacks. We’re focused on figuring out where AI is vulnerable, exposing new threats, and shoring up machine learning techniques to weather a crisis.

Knowing your weakness is as important as knowing your strength. We’re seeking out the soft spots in popular machine learning techniques so we can begin to defend against them.

Poisoned data

One of the most pertinent threats to AI systems is the potential for their training data to be poisoned. For example, a malicious actor who gains access to the training data could inject information that causes the AI model to behave in ways its developers wouldn’t expect.

Unsupervised domain adaptation (UDA) is an approach to generalization in machine learning in which knowledge is transferred from a labeled source domain to an unlabeled target domain with a different data distribution. UDA is useful when obtaining large-scale, well-curated datasets is both time-consuming and costly. Many UDA algorithms work by minimizing an upper bound on the error in the target domain: they minimize the error on the source domain together with the divergence between the data distributions of the two domains. Despite success on benchmark datasets, these methods fail in certain scenarios, and we show that this failure [1] is due to the lack of a lower bound for the error on the target domain. This tells us that UDA methods are sensitive to data distributions, which makes them vulnerable to adversarial attacks such as data poisoning.
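
For context, a standard result from domain-adaptation theory bounds, in one common form, the target error ε_T(h) of a hypothesis h by three terms: the source error ε_S(h), a divergence between the two domains' distributions, and λ, the smallest combined source-plus-target error that any hypothesis in the class ℋ can achieve:

$$
\epsilon_T(h) \;\le\; \epsilon_S(h) \;+\; \tfrac{1}{2}\, d_{\mathcal{H}\Delta\mathcal{H}}(\mathcal{D}_S, \mathcal{D}_T) \;+\; \lambda,
\qquad
\lambda = \min_{h' \in \mathcal{H}} \big[\epsilon_S(h') + \epsilon_T(h')\big]
$$

UDA methods can drive down the first two terms, but λ depends on target labels that UDA never sees, so an upper bound alone cannot rule out a large target error. That is why a complementary lower bound matters.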

To understand the extent of this vulnerability concretely, we evaluate how novel data poisoning attacks, using clean-label and mislabeled data, affect the performance of popular UDA methods. In some cases, their accuracy in the target domain drops to almost 0% with just 10% poisoned data. This dramatic failure demonstrates the limits of UDA methods. It also shows that poisoning attacks can provide insights into the robustness of UDA methods and should be considered when evaluating them as components of an AI system.
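
To make "10% poisoned data" concrete, here is a minimal sketch of the crudest form of mislabeled-data poisoning: randomly reassigning a fixed fraction of labels to a wrong class. This is an illustrative assumption, not the attack from the paper; the attacks evaluated there are crafted specifically to mislead UDA methods, and clean-label attacks keep labels correct and perturb inputs instead. The helper name `flip_labels` is hypothetical.

```python
import random


def flip_labels(labels, fraction, num_classes, seed=0):
    """Return a copy of `labels` in which `fraction` of the entries are
    reassigned to a different, randomly chosen class (naive mislabeling poison)."""
    rng = random.Random(seed)
    poisoned = list(labels)
    n_poison = round(fraction * len(labels))
    # Pick distinct indices so exactly n_poison labels change.
    for idx in rng.sample(range(len(labels)), n_poison):
        wrong_classes = [c for c in range(num_classes) if c != labels[idx]]
        poisoned[idx] = rng.choice(wrong_classes)
    return poisoned


clean = [i % 3 for i in range(100)]
poisoned = flip_labels(clean, fraction=0.10, num_classes=3)
print(sum(a != b for a, b in zip(clean, poisoned)))  # 10
```

Random flipping is a weak baseline; the point of the paper's attacks is that carefully chosen poisons at the same 10% budget can be far more damaging.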

Weight perturbation

Poisoning perturbs the data an AI model learns from, but it is not the only way to attack. A model’s weights, the learned parameters that map its inputs to its outputs, can also be perturbed to influence the model’s output. Sensitivity to weight perturbations has practical implications for many aspects of machine learning, including model compression, generalization gap assessment, and adversarial robustness. In adversarial robustness and security, an attacker can exploit weight sensitivity through fault injection to cause erroneous predictions.
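
As a minimal sketch of why small weight changes matter, assume a toy linear classifier (not a neural network, and not the paper's setting). Under an L∞ budget ε on the weights, the worst-case perturbation moves each weight by ε in the direction of the sign of its input, which shifts the score by ε·‖x‖₁. The function names are hypothetical.

```python
def score(w, b, x):
    """Linear classifier score; its sign is the predicted class (+1 or -1)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def worst_case_weight_perturbation(w, b, x, eps):
    """Move every weight by at most eps (an L-infinity budget) in the direction
    that pushes the score of x toward the opposite class."""
    push = -1.0 if score(w, b, x) > 0 else 1.0
    # d(score)/d(w_i) = x_i, so shifting w_i by push * eps * sign(x_i)
    # changes the score by push * eps * |x_i|, the largest change the budget allows.
    return [wi + push * eps * sign(xi) for wi, xi in zip(w, x)]


w, b, x = [0.5, -0.2], 0.0, [1.0, 2.0]
w_adv = worst_case_weight_perturbation(w, b, x, eps=0.05)
print(score(w, b, x) > 0, score(w_adv, b, x) > 0)  # True False
```

A 0.05 change per weight is enough to flip this prediction because the original margin (0.1) is smaller than ε·‖x‖₁ = 0.15.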

We provide the first formal analysis [2] of the robustness and generalization of neural networks against weight perturbations. We formulated terms that describe how neural networks behave under weight perturbation, and these terms can be incorporated into the training objective to balance accuracy, robustness, and generalization in the context of weight perturbation.
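
The idea of folding a weight-perturbation term into training can be illustrated, under simplifying assumptions, with a linear classifier: the worst-case L∞ weight perturbation of size ε lowers each margin by exactly ε·‖x‖₁, so a robust hinge loss simply subtracts that term before applying the hinge. This linear-model analogue is an assumption for illustration; the paper's analysis covers neural networks and its training loss differs.

```python
def robust_hinge_loss(w, b, data, eps):
    """Mean hinge loss of a linear classifier when an adversary may shift every
    weight by up to eps (L-infinity). Labels y are +1 or -1."""
    total = 0.0
    for x, y in data:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        # Worst case over ||delta||_inf <= eps: y * (delta . x) >= -eps * ||x||_1.
        worst_margin = margin - eps * sum(abs(xi) for xi in x)
        total += max(0.0, 1.0 - worst_margin)
    return total / len(data)


data = [([1.0, 2.0], 1), ([-1.0, 0.5], -1)]
w = [0.5, -0.2]
print(robust_hinge_loss(w, 0.0, data, eps=0.0))   # ordinary hinge loss
print(robust_hinge_loss(w, 0.0, data, eps=0.05))  # larger: worst-case weights
```

Setting `eps=0` recovers the standard hinge loss, so ε acts as the knob that trades clean accuracy against robustness to weight perturbations.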

The best defense is a good offense: We’re predicting how an adversary might strike an AI model to get a head-start on neutralizing the threat.

Reverse-engineering to recover private data

Vertical federated learning (VFL) is an emerging machine learning framework in which a model is trained using data from different owners about the same set of subjects. For example, training on information that different hospitals hold about the same patients, while keeping the actual data private, could produce richer insights. To protect data privacy, only the model parameters and their gradients (i.e., how the training loss changes with respect to those parameters) are exchanged during training. But what if private data could be “recovered” from gradients during VFL? That could result in catastrophic data leakage, and we show that this threat is substantial.
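
Why can gradients leak data at all? A minimal sketch, assuming a single training sample passing through one fully connected layer z = Wx + b: the weight gradient is the outer product of ∂L/∂z with x, and the bias gradient is ∂L/∂z itself, so dividing one by the other returns x exactly. This is the classic single-sample case, not CAFE, which targets the much harder large-batch setting in VFL. The helper names are hypothetical.

```python
def linear_layer_gradients(W, b, x, target):
    """Gradients of L = 0.5 * ||W x + b - target||^2 for one sample."""
    z = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    dz = [zi - ti for zi, ti in zip(z, target)]       # dL/dz
    grad_W = [[dzi * xj for xj in x] for dzi in dz]  # dL/dW = dz x^T
    grad_b = list(dz)                                 # dL/db = dz
    return grad_W, grad_b


def recover_input(grad_W, grad_b):
    """Any row i with a nonzero bias gradient reveals x: x_j = dL/dW_ij / dL/db_i."""
    # Pick the row with the largest bias gradient (fails if all are zero).
    i = max(range(len(grad_b)), key=lambda r: abs(grad_b[r]))
    return [g / grad_b[i] for g in grad_W[i]]


W = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4]]
b = [0.0, 0.1]
x_private = [1.0, 2.0, 3.0]
grad_W, grad_b = linear_layer_gradients(W, b, x_private, target=[0.0, 0.0])
print(recover_input(grad_W, grad_b))  # approximately [1.0, 2.0, 3.0]
```

With a batch, gradients are averaged over samples and this direct division no longer works, which is why large batches were long assumed to be safe.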

We developed an attack method called CAFE [3] that recovers private data at higher quality than existing methods, including data from large batches, which were previously thought to resist such attacks. Our results reveal an unprecedented risk of data leakage during VFL.

As a practical countermeasure to mitigate CAFE, we propose exchanging fake gradients rather than true ones during training. As long as the difference between the fake and true gradients is below a certain threshold, the fake gradients can achieve the same learning performance as the true ones. These findings should be helpful to developers in securing VFL frameworks, particularly in sensitive applications like healthcare.
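
The constraint on fake gradients can be sketched as follows, assuming (for illustration only) that a fake gradient is the true gradient plus a random direction scaled to a chosen L2 distance τ. How the paper actually constructs its fake gradients and picks the threshold is not reproduced here; `fake_gradient` and `tau` are hypothetical names.

```python
import math
import random


def fake_gradient(true_grad, tau, seed=0):
    """Return a randomly perturbed copy of true_grad at L2 distance exactly tau."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in true_grad]
    norm = math.sqrt(sum(n * n for n in noise))
    # Rescale the random direction so the perturbation has length tau.
    return [g + tau * n / norm for g, n in zip(true_grad, noise)]


def l2_distance(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


true_grad = [0.3, -1.2, 0.8, 0.05]
shared = fake_gradient(true_grad, tau=0.1)
print(l2_distance(shared, true_grad))  # 0.1, up to floating-point error
```

The threshold embodies the trade-off described above: a larger τ hides more of the true gradient from an attacker, while a smaller τ keeps training closer to what the true gradients would achieve.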

Turning the tables on the defense

Adversarial training, a state-of-the-art approach to defending against adversarial attacks, is a specific form of Min-Max training, that is, training that aims to minimize the maximum adversarial loss. We show that a generalized Min-Max approach can also be turned around to generate more effective adversarial attacks [4]: the attacker looks for a perturbation that minimizes its attack loss under the worst-case weighting of the models, inputs, or data transformations it must fool, so that no single model or defense strategy blunts the attack. Our generalized Min-Max approach was better than existing methods at misleading AI models in three different adversarial scenarios, including an attack that works against data transformations (such as rotation, lightening, and translation), which are often used as defense strategies.
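
In symbols, and with notation assumed here rather than taken from the paper, adversarial training and the generalized attack can be written as below. θ denotes the model parameters, ℓ the training loss, δ the perturbation (restricted to an allowed set 𝒳 in the attack), f_i(δ) the attack loss on domain i (lower means a more successful attack), Δ_K the probability simplex over K domains, and γ ≥ 0 an assumed regularization strength that keeps the domain weights w from collapsing onto a single domain.

$$
\min_{\theta} \; \mathbb{E}_{(x,y)} \Big[ \max_{\|\delta\|_\infty \le \epsilon} \ell(\theta;\, x+\delta,\, y) \Big]
$$

$$
\min_{\delta \in \mathcal{X}} \; \max_{\mathbf{w} \in \Delta_K} \; \sum_{i=1}^{K} w_i\, f_i(\delta) \;-\; \frac{\gamma}{2}\,\Big\| \mathbf{w} - \tfrac{1}{K}\mathbf{1} \Big\|_2^2
$$

The first line is ordinary adversarial training; in the second, the inner maximization shifts weight toward the domains that are hardest to attack, and the outer minimization forces the perturbation to work on them too.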

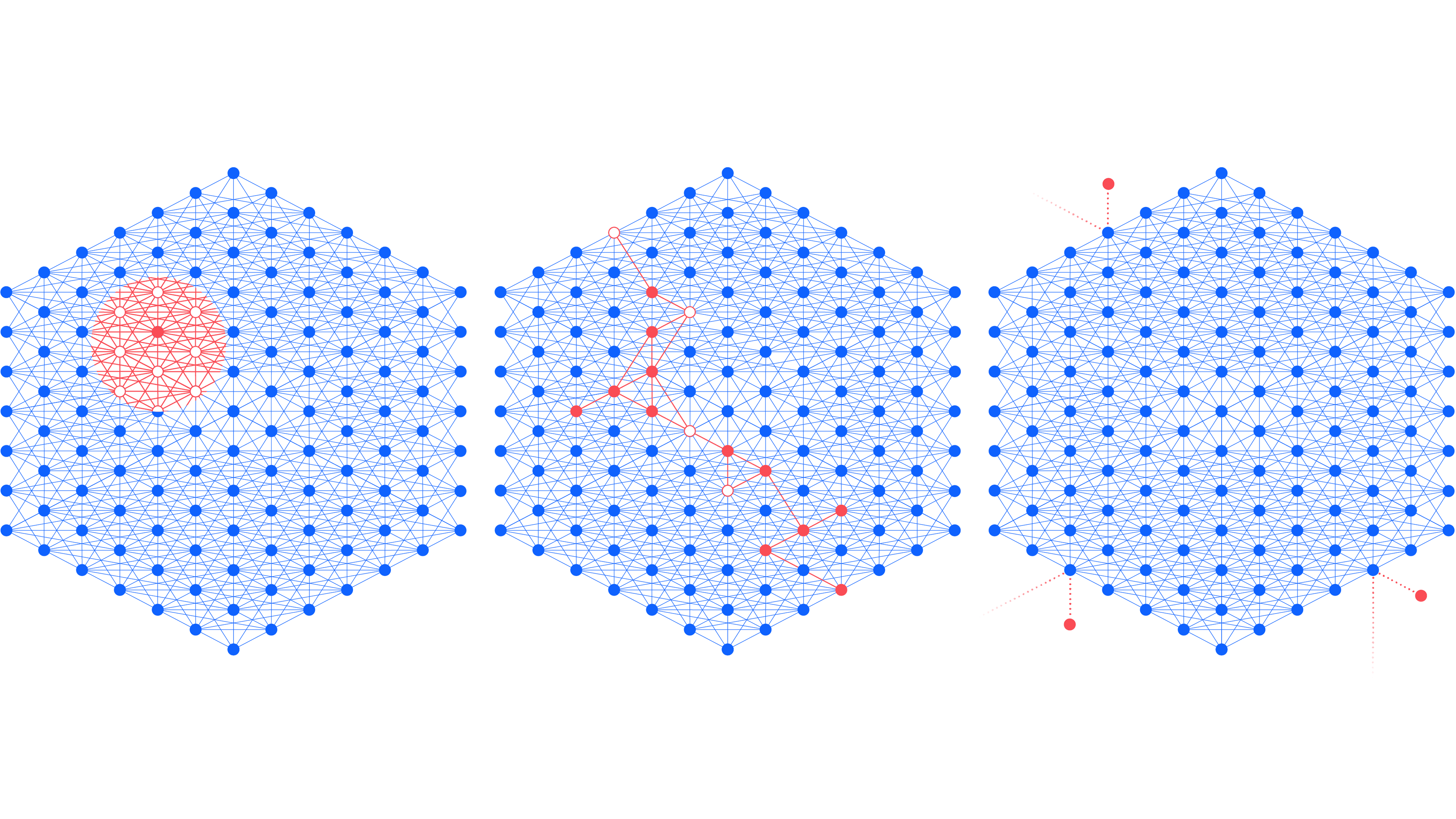

We developed this generalized Min-Max framework by introducing “domain weights”: a probability distribution over a set of domains defined by the specific scenario, which the inner maximization adjusts to emphasize the domains that are hardest to attack. In the scenario above, for instance, the domains are the data transformations; in other scenarios, they may be different AI models or different data samples. The learned domain weights provide a holistic way to assess how difficult each domain (model, sample, etc.) is to attack, that is, how robust it is.
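
A minimal sketch of the domain-weight mechanism, under toy assumptions: three hypothetical domains with scalar attack losses f_i(δ) = (δ − c_i)², domain weights regularized toward uniform with strength γ, and a scalar perturbation δ. For simplicity the sketch solves the inner maximization over weights in closed form (completing the square turns it into a Euclidean projection onto the probability simplex) and then takes a gradient step on δ; the paper's own solver and losses may differ.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) = 1} (sort-based method)."""
    u = sorted(v, reverse=True)
    cumsum, tau = 0.0, 0.0
    for k, u_k in enumerate(u, start=1):
        cumsum += u_k
        t = (cumsum - 1.0) / k
        if u_k - t > 0:
            tau = t  # the last k satisfying the condition sets the threshold
    return [max(x - tau, 0.0) for x in v]


def minmax_attack(centers, gamma=4.0, lr=0.05, steps=200, delta=0.0):
    """Min over delta, max over domain weights w, of
    sum_i w_i * f_i(delta) - gamma/2 * ||w - 1/K||^2,
    with toy per-domain attack losses f_i(delta) = (delta - c_i)^2."""
    k = len(centers)
    w = [1.0 / k] * k
    for _ in range(steps):
        losses = [(delta - c) ** 2 for c in centers]
        # Inner max in closed form: w* = projection of (1/K + f / gamma) onto the simplex.
        w = project_to_simplex([1.0 / k + f / gamma for f in losses])
        # Outer min: gradient step on delta with the weights held at w*.
        grad = sum(wi * 2.0 * (delta - c) for wi, c in zip(w, centers))
        delta -= lr * grad
    return delta, w


delta, weights = minmax_attack([-1.0, 1.0, 3.0])
print(round(delta, 3), [round(x, 2) for x in weights])  # 1.0 [0.5, 0.0, 0.5]
```

The weights end up on the two outer domains, the ones the perturbation finds hardest to satisfy, while the easy middle domain gets zero weight. Reading the weights this way is what makes them a robustness diagnostic.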

Finally, we translate the generalized Min-Max framework to a defense setting, training a model to minimize the worst-case adversarial loss across multiple adversarial attacks. Our approach achieves superior performance in this setting, and the domain weights add interpretability, which can help developers choose AI system components and inputs that are robust to the attacks the system is likely to encounter.
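
One way to write this defense objective, with notation assumed here rather than taken from the paper: θ are the model parameters, ℓ the training loss, δ_i(x) the perturbation that attack i (for example, an L∞ or an L2 attack) produces against the current model, Δ_K the probability simplex over K attacks, and γ ≥ 0 an assumed regularization strength on the attack weights w.

$$
\min_{\theta} \; \max_{\mathbf{w} \in \Delta_K} \; \sum_{i=1}^{K} w_i \, \mathbb{E}_{(x,y)}\big[\ell(\theta;\, x + \delta_i(x),\, y)\big] \;-\; \frac{\gamma}{2}\,\Big\| \mathbf{w} - \tfrac{1}{K}\mathbf{1} \Big\|_2^2
$$

Compared with averaging the losses of all attacks, the maximization over w concentrates training on whichever attacks currently hurt the model most, and the final weights indicate which threats the model remains most exposed to.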

An ounce of prevention is worth a pound of cure: We’re baking robustness into commonly used techniques with new algorithms.

Preserving robustness during contrastive learning

Contrastive learning (CL) is a machine learning technique in which a model learns the general features of a dataset without labels by recognizing which data points are similar and which are different (contrasting them). Learning without relying on labels is called self-supervised learning, and it is quite useful, given that much real-world data is unlabeled. Self-supervision enables robust pre-training, but robustness is often not transferred downstream when the model is fine-tuned for a specific task. We explore how to enhance robustness transfer from pre-training to fine-tuning by using adversarial training (AT). Our ultimate goal is to enable simple fine-tuning with transferred robustness for different downstream tasks from an adversarially robust CL model.
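
The "similar versus different" idea is usually implemented as a contrastive loss. Here is a minimal sketch of an InfoNCE-style loss for one anchor, assuming cosine similarity and a temperature of 0.5 (both assumptions); it is a generic CL loss, not AdvCL itself, which additionally uses adversarial views.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Contrastive (InfoNCE-style) loss: low when the anchor is closer to its
    positive view than to the negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Cross-entropy with the positive at index 0, using a stable log-sum-exp.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


anchor = [1.0, 0.0]
aligned = info_nce(anchor, [0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce(anchor, [0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
print(aligned < misaligned)  # True
```

No labels appear anywhere: the only supervision is which embedding is designated the positive view of the anchor.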

AT is generally used in supervised learning, as it requires labeled training data. With a new framework, Adversarial CL (AdvCL) [5], we eliminate the prerequisite for labeled data and improve model robustness without loss of model accuracy or fine-tuning efficiency. AdvCL outperforms state-of-the-art self-supervised robust learning methods across multiple datasets and fine-tuning schemes. The framework includes two main components. First, we improve view selection, that is, how the model’s views of the data are chosen, by focusing on high-frequency components, which benefit robust representation learning and pre-training generalization ability. Second, we integrate a supervision stimulus by generating pseudo-labels for the data via feature clustering, which improves cross-task robustness transferability. Approaches like AdvCL bring adversarial robustness to CL-based models.
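
Pseudo-labels from feature clustering can be sketched with plain k-means: each feature vector's cluster index becomes its label. This is an assumption about the general mechanism only; the clustering setup in AdvCL (which features, how many clusters, which algorithm) may differ, and the naive "first k points" initialization here is chosen for brevity, not quality.

```python
def squared_distance(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans_pseudo_labels(features, k, iters=20):
    """Assign each feature vector a cluster index to use as a pseudo-label."""
    centroids = [list(f) for f in features[:k]]  # naive init: first k points
    labels = [0] * len(features)
    for _ in range(iters):
        # Assignment step: nearest centroid for every feature vector.
        labels = [min(range(k), key=lambda j: squared_distance(f, centroids[j]))
                  for f in features]
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [f for f, lab in zip(features, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
print(kmeans_pseudo_labels(features, k=2))  # [0, 0, 1, 1]
```

The resulting labels can then supply the supervised signal that AT normally needs, without any human annotation.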

Dealing with contaminated data

Contaminated best arm identification (CBAI) is an approach to selecting the best of several options (“arms”) under the assumption that the data is vulnerable to adversarial corruption, a scenario with practical implications. For instance, in a recommendation system that aims to suggest the most interesting articles to users based on feedback about its previous recommendations, some of that feedback is probably imprecise or even malicious. These “contaminated” samples hinder identification of the true best arm: the one with the best outcome, or highest mean reward.

We propose two algorithms for CBAI [6], one based on reducing the overlap among the confidence intervals for different arms and the other on successive elimination of suboptimal arms. The algorithms involve estimates of the mean reward that achieve the least deviation from the true mean, using as few samples as possible. We provide decision rules for dynamically selecting arms over time, estimating the mean for each arm, stopping the arm-selection process, and finally identifying the true best arm. Both algorithms can identify the best arm, even when a fraction of the samples are contaminated, and outperform existing methods for CBAI on both synthetic and real-world datasets (such as content recommendations). By ensuring high performance even when dealing with contaminated data, these algorithms can enhance the adversarial robustness of real-world AI models.
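
A minimal sketch of the successive-elimination idea under contamination, with several assumptions that are not from the paper: a trimmed mean stands in for the paper's robust mean estimators, a toy adversary corrupts every tenth reward with a large negative value, clean rewards carry bounded uniform noise, and the Hoeffding-style confidence radius is a heuristic. All function names are hypothetical.

```python
import math
import random


def trimmed_mean(xs, trim=0.2):
    """Mean after discarding the lowest and highest `trim` fraction of samples."""
    xs = sorted(xs)
    cut = int(trim * len(xs))
    kept = xs[cut:len(xs) - cut]
    return sum(kept) / len(kept)


def pull(mean, t, rng):
    # Toy contamination model (an assumption): every 10th reward is replaced by an
    # adversarial value; the rest are the true mean plus bounded noise.
    if t % 10 == 9:
        return -10.0
    return mean + rng.uniform(-0.1, 0.1)


def successive_elimination(means, delta=0.05, trim=0.2, max_rounds=10_000, seed=0):
    """Return the index of the arm believed to have the highest mean reward."""
    rng = random.Random(seed)
    k = len(means)
    samples = [[] for _ in range(k)]
    active = list(range(k))
    for n in range(1, max_rounds + 1):
        for i in active:
            samples[i].append(pull(means[i], n - 1, rng))
        est = {i: trimmed_mean(samples[i], trim) for i in active}
        # Hoeffding-style radius for noise spanning 0.2; a heuristic in this sketch.
        radius = 0.2 * math.sqrt(math.log(4 * k * n * n / delta) / (2 * n))
        best_lower = max(est[i] - radius for i in active)
        # Drop every arm whose upper confidence bound falls below the best lower bound.
        active = [i for i in active if est[i] + radius >= best_lower]
        if len(active) == 1:
            return active[0]
    return max(active, key=lambda i: est[i])


print(successive_elimination([0.2, 0.5, 0.8]))  # 2
```

An ordinary sample mean would be dragged far down by the −10 rewards; trimming 20% from each tail removes them here because this toy adversary never corrupts more than 10% of an arm's samples.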

For years, AI models struggled to reach accuracy levels suitable for real-world applications. Now that they’ve reached that threshold for certain tasks, it is crucial to recognize that accuracy isn’t the only benchmark that matters. In the real world, fairness, interpretability, and robustness are critical, and many tools are available to inspect these dimensions of AI models. Developers must actively prepare AI models to succeed in the wild by spotting holes in the armor, predicting an adversary’s next move, and weaving robustness into the fabric of AI.

Adversarial Robustness and Privacy: We’re making tools to protect AI and certify its robustness, including quantifying the vulnerability of neural networks and designing new attacks to make better defenses.

References

[1] Mehra, A., Kailkhura, B., Chen, P. & Hamm, J. Understanding the Limits of Unsupervised Domain Adaptation via Data Poisoning. NeurIPS (2021).

[2] Tsai, Y., Hsu, C., Yu, C. & Chen, P. Formalizing Generalization and Adversarial Robustness of Neural Networks to Weight Perturbations. NeurIPS (2021).

[3] Jin, X., Chen, P., Hsu, C., Yu, C. & Chen, T. Catastrophic Data Leakage in Vertical Federated Learning. NeurIPS (2021).

[4] Wang, J., Zhang, T., Liu, S., Chen, P., Xu, J., Fardad, M. & Li, B. Adversarial Attack Generation Empowered by Min-Max Optimization. NeurIPS (2021).

[5] Fan, L., Liu, S., Chen, P., Zhang, G. & Gan, C. When does Contrastive Learning Preserve Adversarial Robustness from Pretraining to Finetuning? NeurIPS (2021).

[6] Mukherjee, A., Tajer, A., Chen, P. & Das, P. Mean-based Best Arm Identification in Stochastic Bandits under Reward Contamination. NeurIPS (2021).