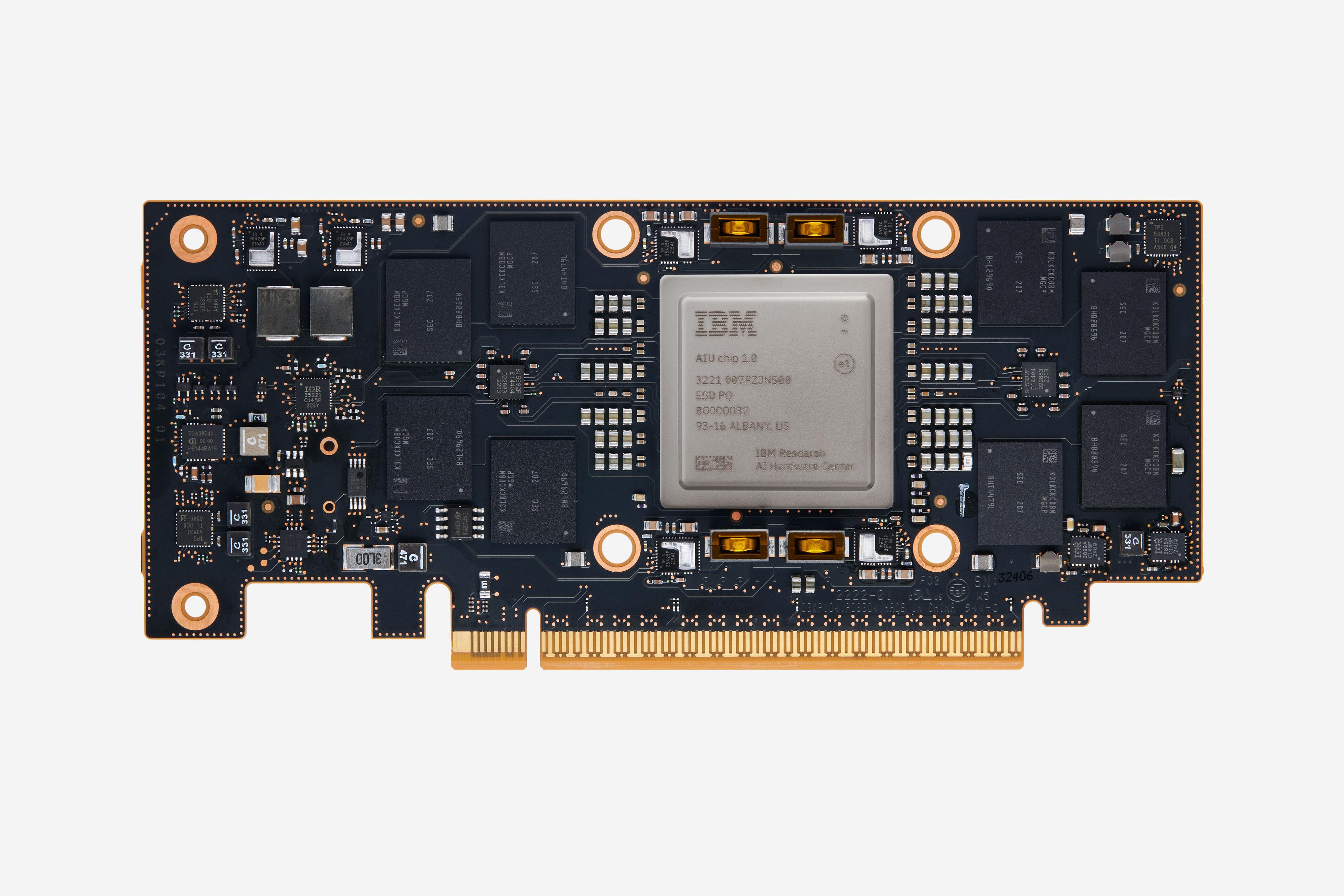

Meet the IBM Artificial Intelligence Unit

It’s our first complete system-on-chip designed to run and train deep learning models faster and more efficiently than a general-purpose CPU.

A decade ago, modern AI was born. A team of academic researchers showed that with millions of photos and days of brute force computation, a deep learning model could be trained to identify objects and animals in entirely new images. Today, deep learning has evolved from classifying pictures of cats and dogs to translating languages, detecting tumors in medical scans, and performing thousands of other time-saving tasks.

There’s just one problem. We’re running out of computing power. AI models are growing exponentially, but the hardware to train these behemoths and run them on servers in the cloud or on edge devices like smartphones and sensors hasn’t advanced as quickly. That’s why the IBM Research AI Hardware Center decided to create a specialized computer chip for AI. We’re calling it an Artificial Intelligence Unit, or AIU.

The workhorses of traditional computing, the standard chips known as CPUs, or central processing units, were designed before the revolution in deep learning, a form of machine learning that makes predictions based on statistical patterns in big data sets. The flexibility and high precision of CPUs are well suited for general-purpose software applications. But those winning qualities put them at a disadvantage when it comes to training and running deep learning models, which require massively parallel AI operations.

A car with a gasoline engine might be able to run on diesel, but if maximizing speed and efficiency is the objective, you need the right fuel. The same principle applies to AI. For the last decade, we’ve run deep learning models on CPUs and GPUs (graphics processors designed to render images for video games) when what we really needed was an all-purpose chip optimized for the types of matrix and vector multiplication operations used for deep learning. At IBM, we’ve spent the last five years figuring out how to design a chip customized for the statistics of modern AI.

In our view, there are two main paths to get there.

One, embrace lower precision. An AI chip doesn’t have to be as ultra-precise as a CPU. We’re not calculating trajectories for landing a spacecraft on the moon or estimating the number of hairs on a cat. We’re making predictions and decisions that don’t require anything close to that granular resolution.

With a technique pioneered by IBM called approximate computing, we can drop from 32-bit floating point arithmetic to bit formats holding a quarter as much information, that is, 8 bits per value. This simplified format dramatically cuts the amount of number crunching needed to train and run an AI model, without sacrificing accuracy.
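To make the idea of lower precision concrete, here is a minimal sketch of one generic reduced-precision scheme: symmetric 8-bit integer quantization of a dot product. It is not a description of the AIU's formats or of IBM's approximate computing technique. The function names, the vector length, and the random data are all illustrative assumptions, and Python's built-in floats (64-bit) stand in for the full-precision reference.

```python
import random

def quantize_int8(values):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [round(v / scale) for v in values], scale

def dot(a, b):
    """Plain dot product, the core operation behind matrix multiplication."""
    return sum(x * y for x, y in zip(a, b))

random.seed(0)  # fixed seed so the illustrative data is reproducible
weights = [random.uniform(-1.0, 1.0) for _ in range(1024)]  # hypothetical data
inputs = [random.uniform(-1.0, 1.0) for _ in range(1024)]   # hypothetical data

q_weights, w_scale = quantize_int8(weights)
q_inputs, x_scale = quantize_int8(inputs)

exact = dot(weights, inputs)
# Accumulate in integers, then rescale once at the end.
approx = dot(q_weights, q_inputs) * w_scale * x_scale

print(f"full precision: {exact:.4f}")
print(f"8-bit approx:   {approx:.4f}")
print(f"abs error:      {abs(exact - approx):.4f}")
```

In this toy setting the 8-bit result tracks the full-precision one closely even though each value carries far fewer bits. Whether accuracy holds up for a real model depends on the model and the format chosen.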

Leaner bit formats also reduce another drag on speed: moving data to and from memory. Our AIU uses a range of smaller bit formats, including both floating point and integer representations, to make running an AI model far less memory intensive. We leverage key IBM breakthroughs from the last five years to find the best tradeoff between speed and accuracy.
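The memory savings follow from simple arithmetic, sketched below. The parameter count is a hypothetical round number, and the three bit widths are common examples chosen for illustration; the text does not list the specific formats the AIU supports.

```python
# Illustrative bit widths only; not a list of AIU-supported formats.
BITS_PER_VALUE = {"32-bit float": 32, "16-bit float": 16, "8-bit integer": 8}

NUM_PARAMS = 1_000_000_000  # hypothetical model with one billion weights

def weight_bytes(num_params, bits):
    """Bytes needed to store num_params values at the given bit width."""
    return num_params * bits // 8

for name, bits in BITS_PER_VALUE.items():
    gib = weight_bytes(NUM_PARAMS, bits) / 2**30
    print(f"{name:>14}: {gib:5.2f} GiB of weights to store and move")
```

Every weight that is read from memory during a prediction costs a quarter as many bytes at 8 bits as at 32 bits, so the same memory bandwidth serves four times as many values.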

Two, an AI chip should be laid out to streamline AI workflows. Because most AI calculations involve matrix and vector multiplication, our chip architecture features a simpler layout than a multi-purpose CPU. The IBM AIU has also been designed to send data directly from one compute engine to the next, creating enormous energy savings.

The IBM AIU is what’s known as an application-specific integrated circuit (ASIC). It’s designed for deep learning and can be programmed to run any type of deep-learning task, whether that’s processing spoken language or words and images on a screen. Our complete system-on-chip features 32 processing cores and contains 23 billion transistors, roughly the same number packed into our z16 chip. The IBM AIU is also designed to be as easy to use as a graphics card. It can be plugged into any computer or server with a PCIe slot.

This is not a chip we designed entirely from scratch. Rather, it’s the scaled version of an already proven AI accelerator built into our Telum chip. The 32 cores in the IBM AIU closely resemble the AI core embedded in the Telum chip that powers our latest IBM z16 system. (Telum uses transistors that are 7 nm in size, while our AIU will feature faster, even smaller 5 nm transistors.)

The AI cores built into Telum, and now, our first specialized AI chip, are the product of the AI Hardware Center’s aggressive roadmap to grow IBM’s AI-computing firepower. Because of the time and expense involved in training and running deep-learning models, we’ve barely scratched the surface of what AI can deliver, especially for enterprise.

We launched the AI Hardware Center in 2019 to fill this void, with the mission to improve AI hardware efficiency by 2.5 times each year. By 2029, our goal is to train and run AI models one thousand times faster than we could three years ago.
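To get a feel for how a 2.5x yearly improvement compounds toward a thousandfold gain, here is a small back-of-the-envelope sketch. It assumes the gains multiply cleanly from one year to the next, which is a simplification and not a statement of the roadmap itself. The constant and function names are illustrative.

```python
import math

ANNUAL_GAIN = 2.5       # yearly efficiency improvement quoted in the text
TARGET_SPEEDUP = 1000   # overall speedup goal quoted in the text

def compounded_gain(years, annual_gain=ANNUAL_GAIN):
    """Total improvement after `years` of multiplying by `annual_gain`."""
    return annual_gain ** years

# Solve annual_gain ** years == target for years.
years_to_target = math.log(TARGET_SPEEDUP) / math.log(ANNUAL_GAIN)
print(f"years of {ANNUAL_GAIN}x gains needed for {TARGET_SPEEDUP}x: {years_to_target:.1f}")

for years in (1, 3, 5, 8):
    print(f"after {years} year(s): {compounded_gain(years):8.1f}x")
```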

Deploying AI to classify cats and dogs in photos is a fun academic exercise. But it won’t solve the pressing problems we face today. For AI to tackle the complexities of the real world — things like predicting the next Hurricane Ian, or whether we’re heading into a recession — we need enterprise-quality, industrial-scale hardware. Our AIU takes us one step closer. We hope to soon share news about its release.