A benchmark for evaluating conversational RAG

A new IBM Research dataset and benchmark evaluates how well LLMs do at interactive question-answering tasks using retrieval-augmented generation.

Retrieval-augmented generation, or RAG, has become a popular way of using AI to find reliable and verifiable answers to questions quickly. Through a RAG pipeline, large language models can call on databases or other external sources of knowledge to ground their responses in the latest, most relevant information without the expense of retraining the language models themselves.

RAG can help to prevent LLMs from improvising when they don’t have the answer and offering up inaccurate or fabricated facts. As an additional safeguard, RAG lets users check citations and verify that the information they’ve been given is correct. Thanks in part to RAG, LLMs today are astonishingly adept at one-off question-answering tasks. But once a task becomes more complicated, requiring an extensive back-and-forth conversation like you’d have with a real human, LLM performance drops.
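To make the pipeline concrete, here is a minimal Python sketch of the two steps a RAG system performs before generation: retrieving relevant passages, then packing them into a prompt that asks the model to answer only from those passages and cite them. It assumes a toy keyword-overlap retriever in place of the sparse or dense retrievers real systems use. The LLM call itself is left out, and the function names (`retrieve`, `build_prompt`) and sample passages are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Toy lexical retriever: rank passages by how many query terms they share."""
    query_terms = Counter(tokenize(query))

    def overlap(passage: str) -> int:
        # Counter & Counter keeps the minimum count of each shared term.
        return sum((query_terms & Counter(tokenize(passage))).values())

    return sorted(passages, key=overlap, reverse=True)[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Number the retrieved passages so the answer can cite them, then add the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered passages and cite them. "
        "If they do not contain the answer, say \"I don't know.\"\n\n"
        f"{context}\n\nQuestion: {query}"
    )


docs = [
    "Jury duty notices are mailed by the county court.",
    "Trash collection happens every Tuesday.",
    "The help center explains how to reset a password.",
]
question = "When is trash collection?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)  # this string would be sent to the LLM (call not shown)
```

Numbering the passages in the prompt is what makes the citation check described above possible: a reader can follow each cited number back to its source text.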

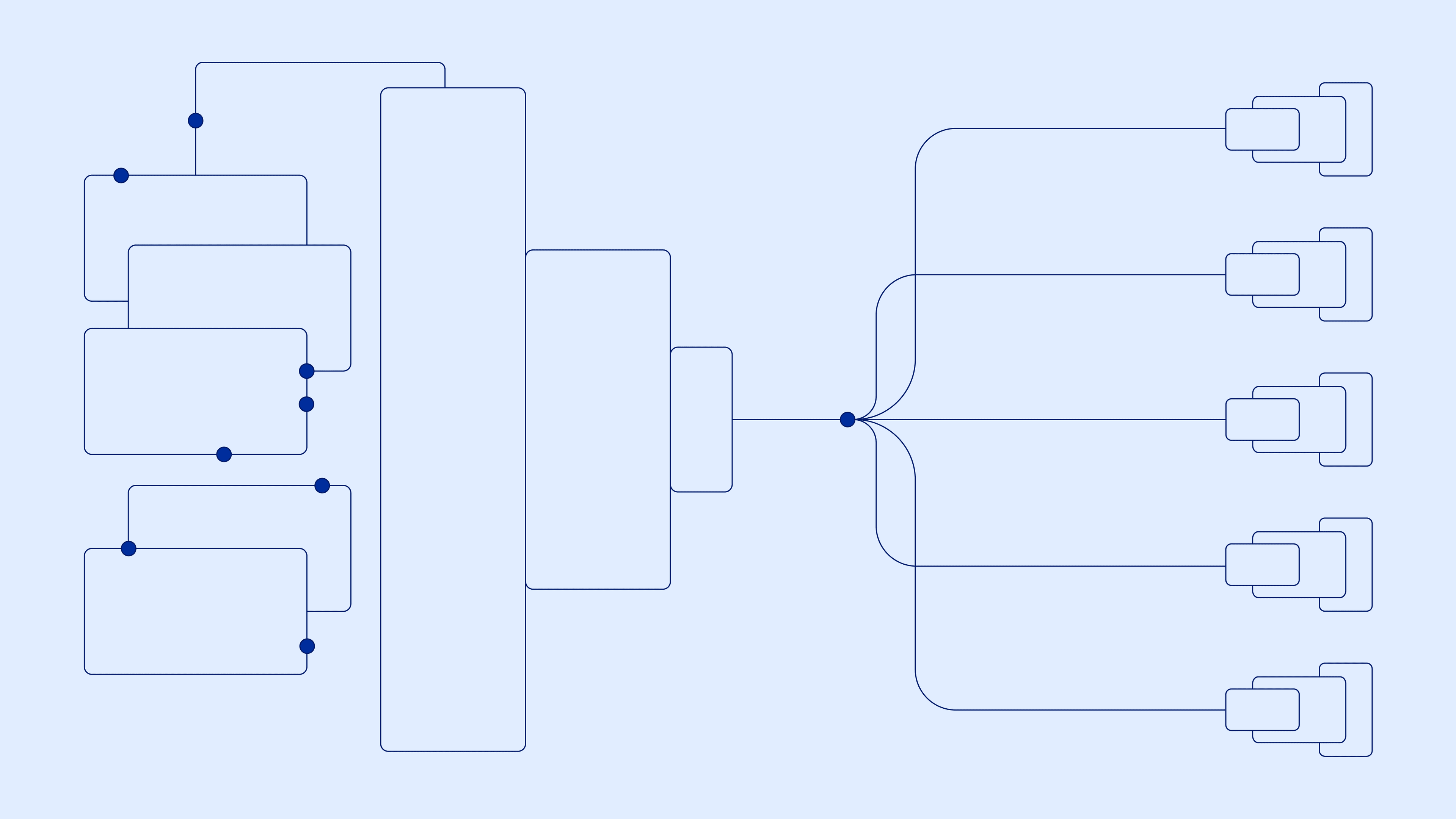

IBM’s new benchmark and dataset, MTRAG, is designed to evaluate how LLMs handle the ambiguity and unpredictability of a live chat with a real person. The dataset consists of 110 extended conversations across four enterprise-relevant domains: finance, general knowledge, IT documentation, and government knowledge. To create it, IBM Research paid skilled human annotators to interact with a live RAG agent, powered by a retriever and an LLM, that fetched and generated content relevant to the question at hand.

Many of the questions in MTRAG were deliberately phrased to be partially or entirely unanswerable. The goal was to see whether the agent would ad-lib when it ought to ask for clarification or simply say, “I don’t know.” The annotators edited the agent’s responses as needed.
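One simple way an agent could decide to say “I don’t know” is to abstain whenever no retrieved passage covers enough of the question. The sketch below is a hypothetical illustration, not how the MTRAG agent or any of the tested models work: it uses word overlap as a crude proxy for answerability, and both the 0.5 threshold and the stopword list are arbitrary assumptions.

```python
import re

# Illustrative stopword list; a real system would not rely on one this small.
STOPWORDS = {"a", "an", "the", "is", "are", "what", "when", "how",
             "do", "does", "i", "my", "of", "to", "in"}


def content_terms(text: str) -> set[str]:
    """Lowercased word tokens with common function words removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def coverage(question: str, passage: str) -> float:
    """Fraction of the question's content terms that also appear in the passage."""
    terms = content_terms(question)
    return len(terms & content_terms(passage)) / len(terms) if terms else 0.0


def answer_or_abstain(question: str, passages: list[str], threshold: float = 0.5) -> str:
    """Abstain unless at least one passage covers `threshold` of the question's terms."""
    best_score, best_passage = max(
        ((coverage(question, p), p) for p in passages), default=(0.0, "")
    )
    if best_score < threshold:
        return "I don't know."
    # A real agent would now prompt the LLM with best_passage; here we just echo it.
    return f"Based on the retrieved passage: {best_passage}"


passages = ["Trash collection happens every Tuesday."]
print(answer_or_abstain("When is trash collection?", passages))
print(answer_or_abstain("How do I renew my passport?", passages))
```

A lexical check like this is easy to fool, which is part of why abstention is hard: a passage can share words with a question without actually answering it.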

Leading LLMs from six companies, including IBM’s Granite models, were tested on the finalized questions, and their responses were compared with the versions cleaned up by the annotators. The experiments revealed two trends. Performance for all models fell dramatically on questions that, by design, couldn’t be answered. And all models did better on the first question in a chat than on later ones.

“These results confirmed our suspicions,” said Sara Rosenthal, an IBM researcher focused on natural-language processing who co-led the creation of the dataset. “We know that LLMs have a hard time saying ‘I don’t know’ when the answer isn’t in its set of reference passages. We also know they struggle in multi-turn conversation that requires keeping up with what’s been asked and clarified previously. Our results show where LLMs need further training to improve reliability.”
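Both trends come from slicing the same per-turn scores two ways: by whether a turn is the first in its conversation, and by whether its question is answerable. The sketch shows that grouping using invented scores for a single model. The numbers are placeholders, not MTRAG results, and the article does not say which metric produced the real comparisons.

```python
from collections import defaultdict
from statistics import mean

# Invented scores between 0 and 1 for a single model.
# These are NOT MTRAG results; they only show how the breakdown is computed.
results = [
    {"conversation": 1, "turn": 1, "answerable": True, "score": 0.9},
    {"conversation": 1, "turn": 2, "answerable": True, "score": 0.7},
    {"conversation": 1, "turn": 3, "answerable": False, "score": 0.2},
    {"conversation": 2, "turn": 1, "answerable": True, "score": 0.8},
    {"conversation": 2, "turn": 2, "answerable": False, "score": 0.4},
]


def mean_score_by(records, group_of):
    """Average the 'score' field within each group returned by group_of(record)."""
    groups = defaultdict(list)
    for record in records:
        groups[group_of(record)].append(record["score"])
    return {group: round(mean(scores), 3) for group, scores in groups.items()}


by_position = mean_score_by(
    results, lambda r: "first turn" if r["turn"] == 1 else "later turns"
)
by_answerability = mean_score_by(
    results, lambda r: "answerable" if r["answerable"] else "unanswerable"
)
print(by_position)
print(by_answerability)
```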

Conversational RAG

IBM’s dataset was designed to closely mimic the flow of natural conversation. Annotators interacted with the live RAG agent through a custom chat application, called Conversational Workbench, designed by IBM to incorporate human feedback. Each annotator received sample conversation starters, or seed examples, to get the chat going. With each conversational turn, annotators evaluated both the passages returned by the retriever and the quality of the LLM’s summarized answers. Responses that contained mistakes were corrected.

Retrieval was done in real time, so that each new response in an ongoing conversation could reflect information conveyed earlier, which helped avoid repetitive or off-topic answers. Annotators also aimed to mimic the concise but informative responses found in CLAP-NQ, an earlier IBM Research dataset created to add more context to the extractive answers found in datasets like the Stanford Question Answering Dataset (SQuAD).
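A follow-up such as “What about recycling?” means little on its own, so a conversational retriever has to draw on earlier turns. Here is a minimal sketch of one common approach, assuming simple concatenation of the most recent user turns. The article does not describe how Conversational Workbench formed its queries; having an LLM rewrite the question into a standalone query is another option. `Turn` and `retrieval_query` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "user" or "agent"
    text: str


def retrieval_query(history: list[Turn], question: str, max_user_turns: int = 2) -> str:
    """Prepend the most recent user turns to the new question.

    A follow-up like "What about recycling?" retrieves poorly on its own;
    carrying earlier user turns along gives the retriever the missing context.
    """
    user_turns = [t.text for t in history if t.speaker == "user"]
    # Guard against max_user_turns == 0, since user_turns[-0:] would return everything.
    recent = user_turns[-max_user_turns:] if max_user_turns > 0 else []
    return " ".join(recent + [question])


history = [
    Turn("user", "When is trash collection in my town?"),
    Turn("agent", "Trash is collected every Tuesday."),
]
print(retrieval_query(history, "What about recycling?"))
```

Capping the number of carried-over turns keeps old, unrelated topics from drowning out the current question.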

IBM’s MTRAG dataset covers a wide body of enterprise-related knowledge. Sources included CLAP-NQ, mentioned above; a set of StackExchange posts on financial advice; a collection of IBM Cloud documentation that an IT worker might consult; and a collection of government web pages that someone might visit for details on jury duty or trash collection.

“The point was to make conversations that would challenge even the best language models on the market,” said Yannis Katsis, an IBM researcher who co-led MTRAG’s development.

MTRAG is also available in Unitxt, IBM’s open-source framework for preparing data so that models can be benchmarked against each other more easily.

RAG’s relevance for LLMs with extended context windows

An LLM’s context window is the maximum amount of text the model can consider as it carries out tasks like chatting with a customer or fixing a line of code. It includes both the text in the user’s prompt and text the model itself has generated. Larger context windows allow the model to hold more text in a kind of working memory, helping it to keep track of key details in a drawn-out chat, or a lengthy document or codebase.

Three years ago, a context window of 4,000 tokens (words and parts of words) was the norm. Today, the industry is shifting to context windows of 128,000 tokens and larger, which has led some to predict the end of RAG. Rather than spend an API call having an LLM retrieve and summarize data through a RAG workflow, they argue, you can simply paste the information you want the model to analyze into the context window.

Others insist that RAG will be relevant for some time. Consistent with other studies, the researchers show that LLMs, like people, experience information overload. Stuff too much information into the context window, and performance is likely to suffer. RAG is also still the best way to search a database with millions of documents or to evaluate contradictory information and generate a more accurate response.
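The arithmetic behind this debate is easy to check. The sketch compares the two window sizes and tests whether a prompt, the pasted documents, and room for the model’s reply all fit in a given window, since the window counts generated text as well. It assumes roughly four characters per token, a common rule of thumb for English text that real tokenizers only approximate, plus a hypothetical 1,000-token reserve for output.

```python
def approx_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; real counts depend on the model's tokenizer."""
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def fits_in_context(documents: list[str], prompt: str, window: int,
                    reserve_for_output: int = 1_000) -> bool:
    """The window must hold the prompt, the pasted documents, and the model's own reply."""
    used = approx_tokens(prompt) + sum(approx_tokens(d) for d in documents)
    return used + reserve_for_output <= window


print(128_000 // 4_000)  # 32: today's larger windows hold 32 times as many tokens as the old norm

report = "x" * 400_000  # stand-in for a ~100,000-token document
print(fits_in_context([report], "Summarize this.", window=4_000))         # False
print(fits_in_context([report], "Summarize this.", window=128_000))       # True
print(fits_in_context([report] * 10, "Summarize this.", window=128_000))  # False
```

Even a 128,000-token window overflows after a handful of long documents, so a collection of millions still needs a retrieval step to choose what goes into the window.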

What’s next

The researchers plan to add sentence-level citations so that users have less text to scan in the retrieved passages when judging whether an AI-generated response is accurate. They also plan to expand the dataset to new knowledge domains.
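Sentence-level citations attach a source to each sentence of an answer rather than to the answer as a whole. The article does not say how IBM plans to produce them. As a hypothetical illustration, the sketch links each sentence to the retrieved passage it shares the most words with, and leaves sentences with no overlap uncited so a reader knows what to check.

```python
import re
from typing import Optional


def words(text: str) -> set[str]:
    """Lowercased alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def cite_sentences(answer: str, passages: list[str]) -> list[tuple[str, Optional[int]]]:
    """Pair each answer sentence with the 1-based number of its best-matching passage.

    "Best" means the most shared words; sentences sharing no words with any
    passage get None, flagging them for a reader to check.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    cited = []
    for sentence in sentences:
        scores = [len(words(sentence) & words(p)) for p in passages]
        best = max(range(len(passages)), key=lambda i: scores[i], default=None)
        citation = best + 1 if best is not None and scores[best] > 0 else None
        cited.append((sentence, citation))
    return cited


passages = [
    "Jury duty notices are mailed by the county court.",
    "Trash collection happens every Tuesday.",
]
answer = "Trash is picked up on Tuesday. Jury notices come from the county court."
for sentence, source in cite_sentences(answer, passages):
    print(f"{sentence} [{source}]")
```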

LLMs are commonly fine-tuned, or aligned, on pairs of questions and answers at the end of their training to improve their conversational skills. In addition to testing LLM competency on complex RAG tasks, MTRAG is also a valuable source of alignment data that IBM Research plans to incorporate into a future Granite release.

“The dataset was created to simulate real-world conversation, so we hope it can make our Granite models even more useful to customers,” said Rosenthal.
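To be used as alignment data, a multi-turn conversation like those in MTRAG can be split into several training examples: one per agent reply, each conditioned on everything said before it. This is a minimal sketch under assumed conventions. The `role`/`content` message fields and the `messages`/`target` output format are illustrative and do not represent Granite’s actual training format, and a real pipeline would likely also include the retrieved passages in each example’s input.

```python
def conversation_to_examples(turns: list[dict]) -> list[dict]:
    """Make one training example per assistant reply in a multi-turn chat.

    Each example's input is every message before that reply, so later examples
    teach the model to use what was asked and clarified earlier.
    """
    return [
        {"messages": turns[:i], "target": turn["content"]}
        for i, turn in enumerate(turns)
        if turn["role"] == "assistant"
    ]


chat = [
    {"role": "user", "content": "When is trash collection?"},
    {"role": "assistant", "content": "Every Tuesday."},
    {"role": "user", "content": "What about recycling?"},
    {"role": "assistant", "content": "I don't know; the passages don't say."},
]
for example in conversation_to_examples(chat):
    print(len(example["messages"]), "->", example["target"])
```

Keeping the full history in later examples is what exposes the model to the multi-turn context tracking the benchmark found to be weak.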
