Getting AI to reason: using neuro-symbolic AI for knowledge-based question answering


Language is what makes us human. Asking questions is how we learn.

Building on the foundations of deep learning and symbolic AI, we have developed technology that can answer complex questions with minimal domain-specific training. Initial results are very encouraging – the system outperforms current state-of-the-art techniques on two prominent datasets with no need for specialized end-to-end training.

As ‘common sense’ AI matures, it will be possible to use it for better customer support, business intelligence, medical informatics, advanced discovery, and much more.

There are two main innovations behind our results. First, we’ve developed a fundamentally new neuro-symbolic technique called Logical Neural Networks (LNNs), in which artificial neurons model a notion of weighted real-valued logic.1 By design, LNNs inherit key properties of both neural networks and symbolic logic and can be used with domain knowledge for reasoning.

Next, we’ve used LNNs to create a new system for knowledge-based question answering (KBQA), a task that requires reasoning to answer complex questions. Our system, called Neuro-Symbolic QA (NSQA),2 translates a given natural language question into a logical form and then uses LNN, our neuro-symbolic reasoner, to reason over a knowledge base and produce the answer.

NSQA achieves state-of-the-art accuracy on two prominent KBQA datasets without the need for end-to-end dataset-specific training. Because it reasons explicitly and formally, NSQA can also explain how it arrived at an answer by laying out each step of its reasoning.

What makes LNNs unique?

LNNs are a modification of today’s neural networks that makes them equivalent to a set of logic statements, while retaining the learning capability of a neural network. Standard neurons are modified so that they precisely model operations in real-valued logic, in which variables can take on values in a continuous range between 0 and 1 rather than just the binary values ‘true’ or ‘false’. LNNs model formal logical reasoning by applying a recursive neural computation of truth values that moves both forward and backward (whereas a standard neural network only moves forward). As a result, LNNs offer greater interpretability, tolerance to incomplete knowledge, and full logical expressivity. Figure 1 illustrates the difference between typical neurons and logical neurons.
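To make the forward and backward computation concrete, here is a minimal Python sketch of a single logical AND neuron. It assumes the weighted Łukasiewicz conjunction described in the LNN paper (reference 1), with a bias β and per-input weights, and keeps a [lower, upper] truth-value interval for every input. The names `Bounds`, `weighted_and`, `upward`, and `downward` are our own illustrative choices, not the API of IBM’s LNN implementation, and the sketch leaves out learning and contradiction handling.

```python
from dataclasses import dataclass
from typing import List

# Illustrative sketch only; not the API of any LNN library.


@dataclass
class Bounds:
    """Truth-value interval; [0, 1] means 'unknown'."""
    lower: float = 0.0
    upper: float = 1.0


def clamp(v: float) -> float:
    return max(0.0, min(1.0, v))


def weighted_and(values: List[float], weights: List[float], beta: float = 1.0) -> float:
    """Weighted Lukasiewicz conjunction: clamp(beta - sum_i w_i * (1 - x_i))."""
    return clamp(beta - sum(w * (1.0 - x) for x, w in zip(values, weights)))


def upward(inputs: List[Bounds], weights: List[float], beta: float = 1.0) -> Bounds:
    """Forward pass: AND is monotone in every input, so input bounds map to output bounds."""
    return Bounds(
        weighted_and([b.lower for b in inputs], weights, beta),
        weighted_and([b.upper for b in inputs], weights, beta),
    )


def downward(output: Bounds, inputs: List[Bounds], weights: List[float],
             beta: float = 1.0) -> List[Bounds]:
    """Backward pass: tighten each input's bounds given the output's bounds."""
    result = []
    for i, (b, w_i) in enumerate(zip(inputs, weights)):
        others = [(inputs[j], weights[j]) for j in range(len(inputs)) if j != i]
        lower, upper = b.lower, b.upper
        if output.lower > 0.0:
            # Output is at least L: even if every other input sits at its
            # upper bound, x_i must be at least this large.
            s_min = sum(w * (1.0 - o.upper) for o, w in others)
            lower = max(lower, clamp(1.0 - (beta - output.lower - s_min) / w_i))
        if output.upper < 1.0:
            # Output is at most U: even if every other input sits at its
            # lower bound, x_i can be at most this large.
            s_max = sum(w * (1.0 - o.lower) for o, w in others)
            upper = min(upper, clamp(1.0 - (beta - output.upper - s_max) / w_i))
        result.append(Bounds(lower, upper))
    return result


# A AND B is known to be false and A is known to be true, so B must be false.
a, b = downward(Bounds(0.0, 0.0), [Bounds(1.0, 1.0), Bounds(0.0, 1.0)], [1.0, 1.0])
print(a, b)  # Bounds(lower=1.0, upper=1.0) Bounds(lower=0.0, upper=0.0)
```

Because the conjunction is monotone, the upward pass simply evaluates it at both ends of the input intervals. The downward pass is what lets knowledge about a conclusion flow back to its premises, which a standard feed-forward network does not do. A weight below 1 softens an input’s influence: `weighted_and([1.0, 0.0], [1.0, 0.5])` gives 0.5 rather than 0.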

Full logical expressivity means that LNNs support an expressive form of logic called first-order logic, which can represent more kinds of knowledge in an understandable form, while real-valued truth values let it represent uncertainty. Many other approaches support only simpler forms of logic, such as propositional logic or Horn clauses, or only approximate the behavior of first-order logic.
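As an illustration of why first-order rules are more expressive than propositional ones: the single rule below, with variables x, y, and z, applies to every person and place in the data, whereas a propositional encoding would need a separate proposition for each combination. This is a minimal sketch under stated assumptions: the facts are hypothetical, truth values are real numbers in [0, 1], conjunction is unweighted Łukasiewicz, and the rule itself is taken to be fully true, so modus ponens yields a lower bound on each conclusion. It is not the LNN library’s API.

```python
from typing import Dict, Tuple

Fact = Tuple[str, str, str]  # (predicate, subject, object)

# Hypothetical toy facts; values are truth degrees in [0, 1].
FACTS: Dict[Fact, float] = {
    ("bornIn", "Einstein", "Ulm"): 1.0,
    ("locatedIn", "Ulm", "Germany"): 1.0,
    ("bornIn", "Curie", "Warsaw"): 1.0,
    ("locatedIn", "Warsaw", "Poland"): 0.9,
}


def luk_and(a: float, b: float) -> float:
    """Unweighted Lukasiewicz conjunction."""
    return max(0.0, a + b - 1.0)


def apply_birthplace_rule(facts: Dict[Fact, float]) -> Dict[Fact, float]:
    """Ground the first-order rule
        forall x, y, z: bornIn(x, y) AND locatedIn(y, z) -> bornIn(x, z)
    over every matching pair of facts. With the rule fully true, Lukasiewicz
    modus ponens gives each conclusion a lower bound equal to the premise's truth."""
    derived: Dict[Fact, float] = {}
    for (p1, x, y), t1 in facts.items():
        if p1 != "bornIn":
            continue
        for (p2, y2, z), t2 in facts.items():
            if p2 == "locatedIn" and y2 == y:
                conclusion = ("bornIn", x, z)
                derived[conclusion] = max(derived.get(conclusion, 0.0), luk_and(t1, t2))
    return derived


for fact, lower in sorted(apply_birthplace_rule(FACTS).items()):
    print(fact, round(lower, 2))
```

The same rule derives both Einstein’s and Curie’s birth countries, and the uncertainty in the Warsaw fact carries through to a weaker lower bound on its conclusion.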

The real-valued logic in LNNs also represents the strength of relationships between logical clauses via neural weights, further improving their predictive accuracy.3 Another advantage of LNNs is that they tolerate incomplete knowledge. Most AI approaches make a closed-world assumption: if a statement doesn’t appear in the knowledge base, it is false. LNNs, on the other hand, maintain upper and lower bounds on the truth value of each variable, which allows the more realistic open-world assumption and a robust way to accommodate incomplete knowledge.
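The difference between the two assumptions shows up as soon as a query asks about a fact that the knowledge base does not contain. The following is a minimal Python sketch, assuming a hypothetical one-fact knowledge base and representing each truth value as a (lower, upper) interval in the spirit of LNN’s bounds; the function names are illustrative and not part of any LNN library.

```python
from typing import Dict, Tuple

Fact = Tuple[str, str, str]
Interval = Tuple[float, float]  # (lower, upper) bounds on a truth value

# Hypothetical toy knowledge base: only one fact is stated.
KB: Dict[Fact, Interval] = {("birthPlace", "Einstein", "Ulm"): (1.0, 1.0)}


def closed_world(fact: Fact) -> Interval:
    """Closed-world assumption: anything not in the KB is false."""
    return KB.get(fact, (0.0, 0.0))


def open_world(fact: Fact) -> Interval:
    """Open-world assumption: anything not in the KB is unknown, i.e. [0, 1]."""
    return KB.get(fact, (0.0, 1.0))


def tighten(current: Interval, evidence: Interval) -> Interval:
    """Intersect bounds with new evidence; an empty interval signals a contradiction."""
    lower, upper = max(current[0], evidence[0]), min(current[1], evidence[1])
    if lower > upper:
        raise ValueError(f"contradiction: lower bound {lower} exceeds upper bound {upper}")
    return (lower, upper)


query = ("birthPlace", "Einstein", "Switzerland")
print(closed_world(query))  # (0.0, 0.0): treated as false
print(open_world(query))    # (0.0, 1.0): unknown until something tightens it
```

Under the open-world reading, later reasoning can only narrow the interval with `tighten`, and bounds that cross expose inconsistent knowledge instead of silently overwriting it.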

Finally, other well-known neuro-symbolic strategies, including techniques based on Markov random fields (such as Markov logic networks) and many others based on embeddings (such as logic tensor networks), are less understandable, both because they rely on hard-to-interpret weights and because they lack the same kind of language-like structure. They also assume complete world knowledge and do not perform as well in initial experiments testing learning and reasoning.

Neuro-Symbolic Question Answering

There are several flavors of question answering (QA) tasks: text-based QA, context-based QA (in the context of interaction or dialog), and knowledge-based QA (KBQA). We chose to focus on KBQA because such tasks demand advanced reasoning, such as multi-hop, quantitative, geographic, and temporal reasoning.

With our NSQA approach, it is possible to design a KBQA system with very little or no end-to-end training data. Currently popular end-to-end trained systems, on the other hand, require thousands of question-answer or question-query pairs, which is unrealistic in most enterprise scenarios.

In this work, we approach KBQA with the basic premise that if we can correctly translate natural language questions into an abstract form that captures their conceptual meaning, we can reason over existing knowledge to answer complex questions. Table 1 illustrates the kinds of questions NSQA can handle and the form of reasoning required to answer them. This approach provides interpretability, generalizability, and robustness, all critical requirements in enterprise NLP settings.

| Question Type/Reasoning | Example | Supported |
|---|---|---|
| Simple | Who is the mayor of Paris? | ✓ |
| Multi-relational | Give me all actors starring in movies directed by William Shatner | ✓ |
| Count-based | How many theories did Albert Einstein come up with? | ✓ |
| Superlative | What is the highest mountain in Italy? | ✓ |
| Comparative | Does Breaking Bad have more episodes than Game of Thrones? | |
| Geographic | Was Natalie Portman born in the United States? | ✓ |
| Temporal | When will start [sic] the final match of the football world cup 2018? | |

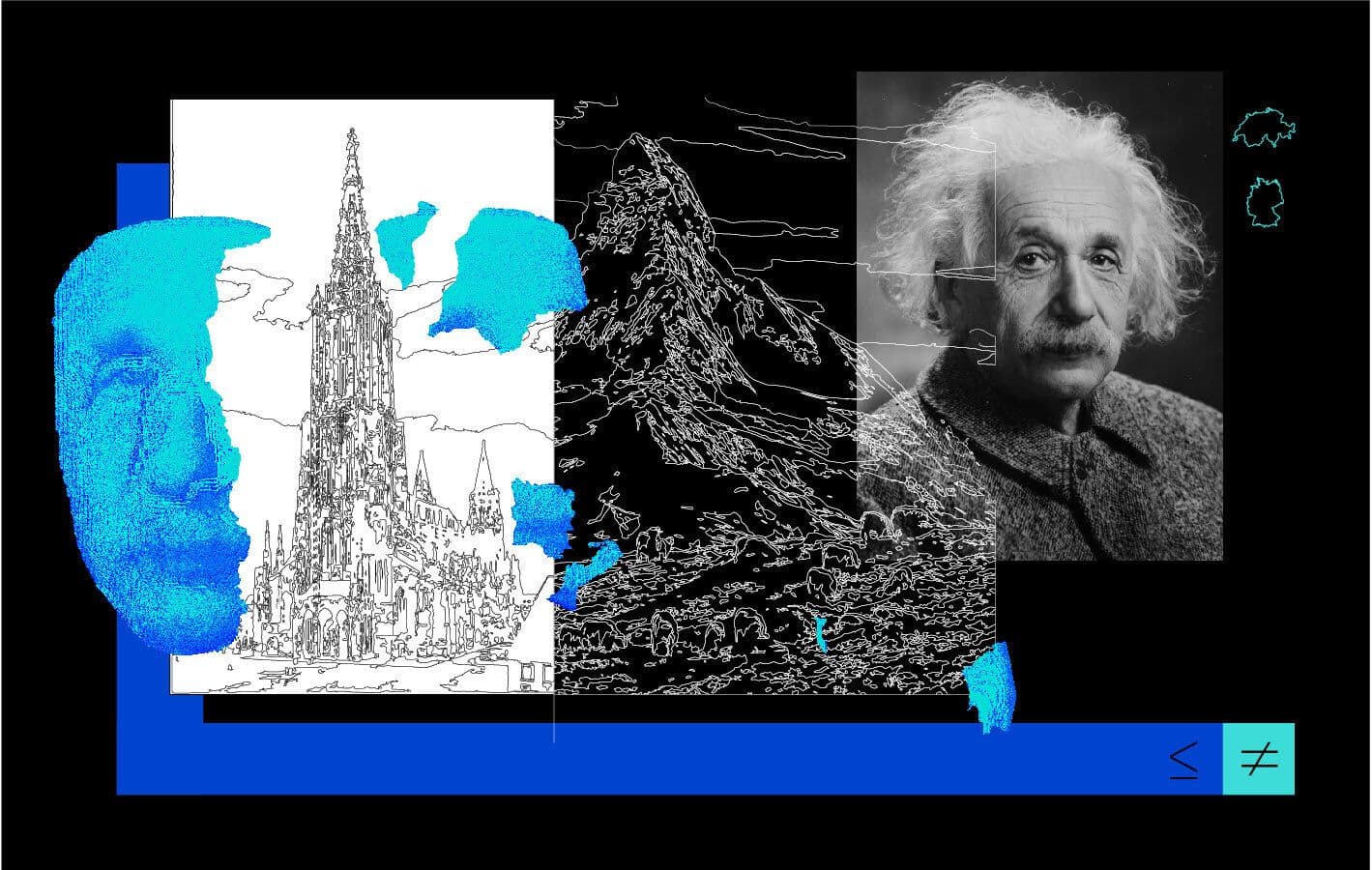

For instance, say NSQA starts with the question “Was Albert Einstein born in Switzerland?” (see Figure 2). The system first puts the question into a generic logic form by transforming it into an Abstract Meaning Representation (AMR). Each AMR captures the semantics of the question using a vocabulary independent of the knowledge graph, an important quality that allows us to apply the technology independently of the end task and the underlying knowledge base.4
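To show what a knowledge-graph-independent representation looks like, here is a hand-written AMR for the example question in PENMAN notation (as a comment), followed by a small Python sketch that reads its triples into a predicate-argument logic form. The AMR is our own approximation, not output from the AMR parser of reference 4; yes/no questions are marked with `:polarity (a / amr-unknown)` following the AMR convention; and `amr_to_logic_form` is a hypothetical helper that handles only this simple pattern.

```python
from typing import Dict, List, Optional, Tuple

# Hand-written AMR for "Was Albert Einstein born in Switzerland?" (illustrative):
#   (b / bear-02
#      :ARG1 (p / person :name (n / name :op1 "Albert" :op2 "Einstein"))
#      :location (c / country :name (n2 / name :op1 "Switzerland"))
#      :polarity (a / amr-unknown))
# flattened into (source, role, target) triples.
Triple = Tuple[str, str, str]
AMR: List[Triple] = [
    ("b", ":instance", "bear-02"), ("b", ":ARG1", "p"), ("b", ":location", "c"),
    ("b", ":polarity", "a"), ("a", ":instance", "amr-unknown"),
    ("p", ":instance", "person"), ("p", ":name", "n"),
    ("n", ":instance", "name"), ("n", ":op1", "Albert"), ("n", ":op2", "Einstein"),
    ("c", ":instance", "country"), ("c", ":name", "n2"),
    ("n2", ":instance", "name"), ("n2", ":op1", "Switzerland"),
]


def amr_to_logic_form(triples: List[Triple], top: str = "b"):
    """Hypothetical helper: return (predicate, {role: (concept, name)}, is_yes_no)."""
    concept = {s: t for s, r, t in triples if r == ":instance"}
    edges = [(s, r, t) for s, r, t in triples if r != ":instance"]

    def surface_name(var: str) -> Optional[str]:
        # Join the :op1, :op2, ... strings of the variable's :name node.
        name_var = next((t for s, r, t in edges if s == var and r == ":name"), None)
        if name_var is None:
            return None
        ops = sorted((r, t) for s, r, t in edges if s == name_var and r.startswith(":op"))
        return " ".join(t for _, t in ops)

    args: Dict[str, Tuple[Optional[str], Optional[str]]] = {}
    is_yes_no = False
    for s, r, t in edges:
        if s != top:
            continue
        if r == ":polarity" and concept.get(t) == "amr-unknown":
            is_yes_no = True  # amr-unknown polarity marks a yes/no question
        else:
            args[r.lstrip(":")] = (concept.get(t), surface_name(t))
    return concept[top], args, is_yes_no


print(amr_to_logic_form(AMR))
```

Nothing in this form mentions DBpedia: `bear-02` and `:location` are AMR vocabulary, which is what lets the same parse be linked to different knowledge bases in a later step.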

The AMR is aligned to the terms used in the knowledge graph by entity linking and relation linking modules and is then transformed into a logic representation.5 This logic representation is submitted to the LNN, which performs the necessary reasoning, such as type-based and geographic reasoning, to return the answers to the question. For example, Figure 3 shows the steps of geographic reasoning the LNN performs, using manually encoded axioms and the DBpedia knowledge graph, to return an answer.
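The linking and reasoning steps for this example can be sketched in Python as follows. Everything here is an illustrative assumption: the linking tables stand in for the entity- and relation-linking modules, the triples are a toy DBpedia-style subset rather than actual DBpedia content, and the two axioms (a place’s country carries over to a person’s birthplace, and a person is born in exactly one country) are hand-written in the spirit of the manually encoded axioms mentioned above. The function also records each step, the kind of trace that makes an answer explainable.

```python
from typing import List, Tuple

# Hypothetical linking tables standing in for the entity- and relation-linking modules.
ENTITY_LINKS = {"Albert Einstein": "dbr:Albert_Einstein", "Switzerland": "dbr:Switzerland"}
RELATION_LINKS = {("bear-02", "location"): "dbo:birthPlace"}

# Toy DBpedia-style triples (illustrative, not queried from DBpedia).
KG = {
    ("dbr:Albert_Einstein", "dbo:birthPlace", "dbr:Ulm"),
    ("dbr:Ulm", "dbo:country", "dbr:Germany"),
}


def was_born_in(person_label: str, country_label: str) -> Tuple[Tuple[float, float], List[str]]:
    """Answer a yes/no birthplace question as (lower, upper) truth bounds plus a trace."""
    person = ENTITY_LINKS[person_label]
    target = ENTITY_LINKS[country_label]
    relation = RELATION_LINKS[("bear-02", "location")]
    lower, upper = 0.0, 1.0  # open world: unknown until the axioms tighten it
    trace: List[str] = []
    for subj, pred, place in sorted(KG):
        if subj != person or pred != relation:
            continue
        trace.append(f"{person} {relation} {place}")
        for subj2, pred2, country in sorted(KG):
            if subj2 != place or pred2 != "dbo:country":
                continue
            # Axiom 1 (hypothetical): born in a place located in a country => born in that country.
            trace.append(f"{place} dbo:country {country}, so {person} was born in {country}")
            if country == target:
                lower = 1.0
            else:
                # Axiom 2 (hypothetical): a person is born in exactly one country.
                upper = 0.0
                trace.append(f"one birth country and {country} != {target}, so not born in {target}")
    return (lower, upper), trace


bounds, steps = was_born_in("Albert Einstein", "Switzerland")
print("Answer:", {(1.0, 1.0): "Yes", (0.0, 0.0): "No"}.get(bounds, "Unknown"))
for step in steps:
    print(" -", step)
```

Without the second axiom, the bounds would stay at [0, 1] and the honest answer under the open-world assumption would be “unknown” rather than “no”.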

Experimental results were very promising

We’ve tested the effectiveness of our system on two commonly used KBQA datasets: QALD-9, which has 408 training questions and 150 test questions, and LC-QuAD 1.0, which has 5,000 questions.6 We’ve applied NSQA to these tasks without any end-to-end training. Because NSQA consists of cutting-edge generic components that are independent of the underlying knowledge graph (data), unlike other approaches that are heavily tuned to the data and task at hand, it can generalize to different datasets and tasks without massive training.

We measured the accuracy of our approach using the official metrics of the two benchmark test sets. NSQA achieved a Macro F1 QALD¹ score of 45.3 on QALD-9 and an F1 score of 38.3 on LC-QuAD, ahead of the current state-of-the-art systems tuned on the respective datasets (43.0 and 33.0).7
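For readers unfamiliar with the metric, macro F1 scores each question separately and then averages over questions, so every question counts equally regardless of how many answers it has. The Python sketch below shows that idea on answer sets. It is an approximation, not the official QALD scorer, whose Macro F1 QALD variant has its own conventions (for example, for empty answer sets); the empty-set handling and the answer identifiers here are our own assumptions.

```python
from typing import Iterable, Set, Tuple


def answer_f1(gold: Set[str], predicted: Set[str]) -> float:
    """Per-question F1 between gold and predicted answer sets."""
    if not gold and not predicted:
        return 1.0  # assumption: correctly returning nothing counts as a perfect answer
    overlap = len(gold & predicted)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def macro_f1(results: Iterable[Tuple[Set[str], Set[str]]]) -> float:
    """Average per-question F1 over all questions, on a 0-100 scale."""
    scores = [answer_f1(gold, predicted) for gold, predicted in results]
    return 100.0 * sum(scores) / len(scores)


# Hypothetical (gold, predicted) answer sets for three questions.
results = [
    ({"a"}, {"a"}),       # exact match: F1 = 1
    ({"a", "b"}, {"a"}),  # precision 1, recall 0.5: F1 = 2/3
    ({"c"}, set()),       # nothing returned: F1 = 0
]
print(round(macro_f1(results), 1))  # 55.6
```

On this 0 to 100 scale, the reported margins work out to 2.3 points on QALD-9 (45.3 vs. 43.0) and 5.3 points on LC-QuAD (38.3 vs. 33.0).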

Question-answering is the first major use case for the LNN technology we’ve developed. While achieving state-of-the-art performance on the two KBQA datasets is an advance over other AI approaches, these datasets do not display the full range of complexities that our neuro-symbolic approach can address. In particular, the level of reasoning required by these questions is relatively simple.

The next step for us is to tackle successively more difficult question-answering tasks, for example those that test complex temporal reasoning and handling of incompleteness and inconsistencies in knowledge bases.

IBM broke ground with Jeopardy!, showing that question answering by a machine was possible well beyond the level expected by both AI researchers and the general public, and inspiring a tremendous amount of question-answering work across the field. We hope this work also inspires a next generation of thinking and capabilities in AI.


Notes

- Note 1: With real-valued logic, variables can take on values in a continuous range between 0 and 1, rather than just binary values of ‘true’ or ‘false.’

References

1. Riegel, R. et al. Logical Neural Networks. arXiv:2006.13155 [cs] (2020).
2. Kapanipathi, P. et al. Leveraging Abstract Meaning Representation for Knowledge Base Question Answering. arXiv:2012.01707 [cs] (2021).
3. Fagin, R., Riegel, R. & Gray, A. Foundations of Reasoning with Uncertainty via Real-valued Logics. arXiv:2008.02429 [cs] (2021).
4. Lee, Y.-S. et al. Pushing the Limits of AMR Parsing with Self-Learning. arXiv:2010.10673 [cs] (2020).
5. Mihindukulasooriya, N. et al. Leveraging Semantic Parsing for Relation Linking over Knowledge Bases. in The Semantic Web – ISWC 2020 (eds. Pan, J. Z. et al.) 402–419 (Springer International Publishing, 2020).
6. Usbeck, R., Gusmita, R. H., Ngomo, A. N. & Saleem, M. 9th Challenge on Question Answering over Linked Data (QALD-9). in Semdeep/NLIWoD@ISWC (2018).
7. Trivedi, P., Maheshwari, G., Dubey, M. & Lehmann, J. LC-QuAD: A Corpus for Complex Question Answering over Knowledge Graphs. in The Semantic Web – ISWC 2017 (eds. d’Amato, C. et al.) 210–218 (2017).
