Revolutionizing geospatial data management

IBM Research's prototype TensorLakehouse is a tool that has the potential to dramatically simplify how we analyze geospatial data.

Our world is increasingly confronted by severe weather events driven by global warming. AI and geospatial data are potentially powerful tools to identify greenhouse gas emission hotspots, monitor climate risks, and propose adaptation strategies, guiding us toward a more sustainable future.

Satellites monitor every part of our planet every day, accumulating an immense data volume of 807 petabytes. Processing such vast amounts of data on a continental or global scale is a formidable challenge. The complexity is further heightened by the diversity in data formats, spatial and temporal resolutions, and Coordinate Reference Systems (CRS) in which raw geospatial and temporal data are provided.

To fully use all of this data, it is essential to align the different types, such as photographic and radar images. This alignment requires tools to adjust resolutions, CRS projections, and integrate raster data with vector data. Once all that data has been harmonzied, querying such a large data lake to extract meaningful information remains a significant task. It often requires reading multiple terabytes of data to identify a few relevant bytes of valuable insights. Additionally, training large-scale AI models demands rapid streaming of data samples to GPU memory to maximize the utilization of costly GPU clusters, especially when data is stored across different data centers.

Embracing these technologies and overcoming these challenges is crucial to keep advancing our understanding and response to climate change. To address these challenges, IBM created TensorLakehouse (TLH). It’s a prototype tool out of IBM Research that integrates advanced techniques such as data federation, database indexing, stride-access optimized data layout, and AI-based query capabilities to streamline the analysis of geospatial data.

Managing data efficiently with TensorLakeHouse

TLH aims to minimize data access whenever possible with the help of indices. When accessing data is necessary, TLH reduces latency and maximizes throughput by optimizing block size and data layout on disk. This approach significantly enhances the efficiency of processing large volumes of high-dimensional data.

Unlike traditional data management systems, which are typically optimized for tabular data, the TLH prototype excels in handling scientific data such as images, climate data, and video, as well as other scientific data types, such as biological sequences, and neuroscience data. It organizes all data in hyperdimensional cubes and allows queries to extract sub-cubes or tensors, which is where the name TensorLakehouse comes from.

A key feature of TLH is its ability to answer queries directly from a Hierarchical Statistical Index (HSI), a technique borrowed from traditional database systems. By storing statistics about the underlying data, the index can determine if it is necessary to read specific blocks of data, effectively skipping raw data access whenever possible. This means that some statistical queries can be answered directly from the index, significantly speeding up data retrieval.

Running on cloud-native infrastructures, TLH could help organizations reduce costs associated with data storage and computation by leveraging cloud object storage. Additionally, TLH supports the CLAIMED framework for massive parallel data ingestion, automatically generating grid computing-based data ingestion pipelines. In testing, this capability has been shown to reduce data ingestion times from 270 days to less than one day in a real production use case.

TLH and foundation models

Recently, foundation models have demonstrated exceptional performance, particularly in the form of large language models (LLMs). In a landmark collaboration with NASA, IBM Research released and open-sourced Prithvi, the first Earth Observation (EO) foundation model. This year, the collaboration expanded to include a foundation model focused on weather and climate.

The groundbreaking capabilities of foundation models, such as label efficiency and the ability to generalize across multiple applications, are heavily reliant on streamlined data access during pre-training. This is where TensorLakehouse (TLH) can provide true value. This is especially true for multi-modal data, since any additional dataset just appears as additional dimension — or layer — in TLH’s hypercube. This concept generalizes to data federation across data centers, making data available under the same open API endpoint, regardless of the physical data source.

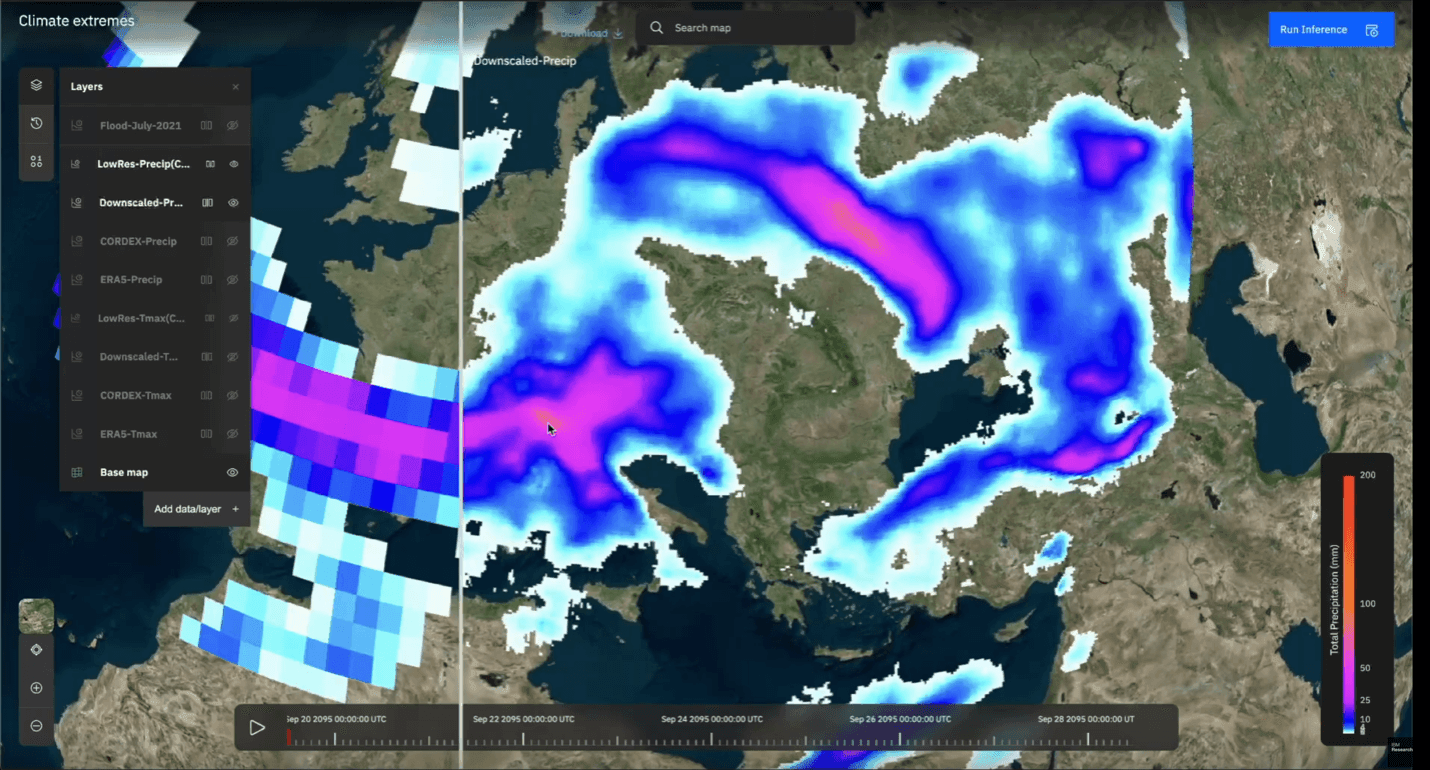

At Think this year, IBM showed off its prototype. It’s a graphical development environment for all fine-tuning and inference tasks, and sits on top of the API layer TLH provides. This way, without any programming skills, researchers can seamlessly apply foundation models on any dataset. If you can use a map application, you can use Geospatial Studio.

While Geospatial Studio is the largest internal user of TLH, the TLH is also powering a major meteorological service provider, Environment and Climate Change Canada, and the UK governmental program, HNCDI.

Why IBM open-sourced TensorLakehouse

IBM believes that open science principles, supported by open standards and open-source code, are crucial accelerators for scientific progress. We see them as key to advance AI for climate and sustainability — something we argued in a recent white paper released by the Swiss Academy of Engineering Sciences (SATW).

IBM is committed to open science. In December 2023, IBM and Meta founded the AI Alliance along with dozens of other companies, research facilities, and academic institutions. It’s a global community of technology creators, developers, and adopters collaborating to advance safe, responsible AI rooted in open innovation. IBM has decided to open-source TLH to further contribute to community building and innovation. Collaborating with organizations like NASA, ESA, and many others, the aim is to create a vibrant ecosystem around TLH, driving forward geospatial research.

Providing a cloud-native reference implementation for the emerging Open Geospatial Consortium (OGC) standards, TLH supports a broad range of research projects. For example, the EU’s Embed2Scale project utilizes TLH for efficient data exchange, federated computing, and similarity search.

As TLH’s open standard and open source-based interfaces (along with comprehensive documentation), make it accessible to everybody. TLH can also be installed on any cloud or on-premise infrastructure setup in a few simple steps.

What’s next in geospatial data management: Embeddings as lingua franca

The language of large-scale foundation models are embeddings generated by encoders. These embeddings represent the semantic information of the raw data and as such, are all an AI encoder needs to perform multiple downstream tasks, like flood detection in satellite images. Accordingly, we’re exploring the exchange of embeddings instead of the raw data between data centers in the EU-funded Embed2Scale project with partners like the German Aerospace Center and the Jülich Supercomputing Center. The project aims at generating compressed embeddings from satellite images to reduce data transfer energy, latency and data storage.

This week, we released our initial work on neural embedding compression and image retrieval based on Earth observation foundation models at the IEEE GARSS conference in Athens. There, we demonstrated specific ways to compress embeddings by neural compression. We’ve been able to reduce transfer latency and storage substantially at minimal drop of data utility. We also discussed content-based image retrieval means by foundation model generated embeddings stored in vector databases for Earth observation images. We’ve demonstrated a mean average precision of 97.4% for the BigEarthNet benchmark classes at high retrieval speeds.

Overall, we expect the exchange of embeddings from foundation models to pick up in the next few years and hopefully democratizing the access to earth observation data also to places with limited bandwidth.

Related posts

- ReleaseMike Murphy

A more fluid way to model time-series data

ResearchKim MartineauFrom simulated steps to real-world care: AI learns how we walk for neurology

ResearchPeter HessRevolutionizing industries with AI-powered digital twins

Case studyPeter Hess