Reinventing data preparation on cloud to scale enterprise AI

IBM Research has been curating a massive, trustworthy dataset on the IBM Cloud to power the roadmap for IBM’s Granite model series, which is trained on Vela systems. Innovations in how we scale up our data processing pipeline are helping IBM move faster and produce higher-quality Granite models than ever before.

The AI explosion has seen demand for processing power skyrocket. Companies that provide AI infrastructure have become some of the most valuable in the world, and researchers everywhere are looking for ways to make training and running their AI workloads more efficient. But there are other parts of the AI pipeline that are ripe for optimization and innovation — including those that rely on the far more abundant capacity of CPUs.

Before you can begin to train a new AI model, you need training data. Depending on what sort of model you’re training, you may need different kinds of information. For a large language model, you need billions of text documents across various genres. In practice, much of this data comes from websites, PDFs, and news articles, along with myriad other sources. However, this data can’t be used in its raw form to train models.

A series of steps is needed to get this data ready, including stripping out any irrelevant HTML code, filtering the text for things like hate and abuse, and checking for duplicate information, among other steps. These steps get automated into a data processing pipeline. “A large part of the time and effort that goes into training these models is preparing the data for these models,” said IBM Research’s Petros Zerfos. “And that work is not bound by availability of GPUs.”
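To make those steps concrete, here is a minimal single-machine sketch of such a pipeline in Python. It is an illustration only, not the team’s implementation: the function names, the word-list filter, and the exact-match duplicate check are all assumptions chosen to keep the example short. A production pipeline would typically use a trained classifier for abuse filtering and fuzzy matching for duplicates.

```python
import hashlib
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and skips the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def strip_html(raw):
    """Step 1: drop markup and collapse whitespace."""
    parser = _TextExtractor()
    parser.feed(raw)
    parser.close()
    return " ".join(" ".join(parser.parts).split())


def passes_filter(text, blocklist):
    """Step 2 (hypothetical): reject text containing any blocklisted word."""
    return set(text.lower().split()).isdisjoint(blocklist)


def run_pipeline(raw_docs, blocklist=frozenset()):
    """Runs the steps in order and keeps the first copy of each document."""
    seen = set()
    kept = []
    for raw in raw_docs:
        text = strip_html(raw)
        if not text or not passes_filter(text, blocklist):
            continue
        # Step 3: exact-duplicate check on a case-insensitive hash.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(text)
    return kept


if __name__ == "__main__":
    pages = ["<p>Hello <b>world</b></p>", "<div>hello world</div>"]
    print(run_pipeline(pages))
```

The first two steps look at one document at a time, while the duplicate check has to remember every document seen so far.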

Zerfos, who is IBM Research’s principal research scientist for watsonx data engineering, has been working with his team to find ways to make data processing for AI training more efficient. It’s a big effort that’s required expertise from researchers working in a broad range of domains, including NLP, distributed computing, networking, and storage systems.

Many of the steps in this data processing pipeline are what’s known as “embarrassingly parallel” computations. This essentially means that any website or document can be processed independently of the others. Rather than working through the data sequentially, you can distribute the computation for different parts of the dataset across as many processors as you can get, and the time it takes to build your dataset shrinks roughly in proportion to the number of processors. Some steps in the pipeline are not embarrassingly parallel, however. Removing duplicate documents from the training data, for example, is important because repeated pieces of data can accidentally bias the trained model towards the repeated information. But finding duplicates of a given document requires comparing it against all other documents, so this step cannot be performed on each document independently.
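The contrast between the two kinds of steps can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the team’s code: `fingerprint`, `parallel_fingerprints`, and `drop_exact_duplicates` are hypothetical names, and a thread pool is used only so the sketch runs anywhere. For CPU-bound work at scale, a process pool or a distributed framework would take the pool’s place.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def fingerprint(doc):
    """Per-document work: depends on this document and nothing else."""
    normalized = " ".join(doc.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def parallel_fingerprints(docs, executor):
    """Embarrassingly parallel: each document can go to any worker."""
    # executor.map returns results in the same order as the inputs.
    return list(executor.map(fingerprint, docs))


def drop_exact_duplicates(docs, fingerprints):
    """Not embarrassingly parallel: needs a view across all documents."""
    seen = set()
    unique = []
    for doc, fp in zip(docs, fingerprints):
        if fp not in seen:
            seen.add(fp)
            unique.append(doc)
    return unique


docs = ["The quick brown fox", "the  quick brown FOX", "A different page"]
with ThreadPoolExecutor(max_workers=4) as pool:
    fps = parallel_fingerprints(docs, pool)
unique = drop_exact_duplicates(docs, fps)
print(unique)
```

Computing fingerprints scales out freely because no worker needs another worker’s results. The final pass is the part that needs coordination, since it keeps a shared record of everything already seen.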

Apart from cases where a workflow step itself uses an AI model (such as a classifier to label a document as abusive), most of the data processing steps can be run on CPUs, and so aren’t bottlenecked by the availability of much more expensive GPU infrastructure.

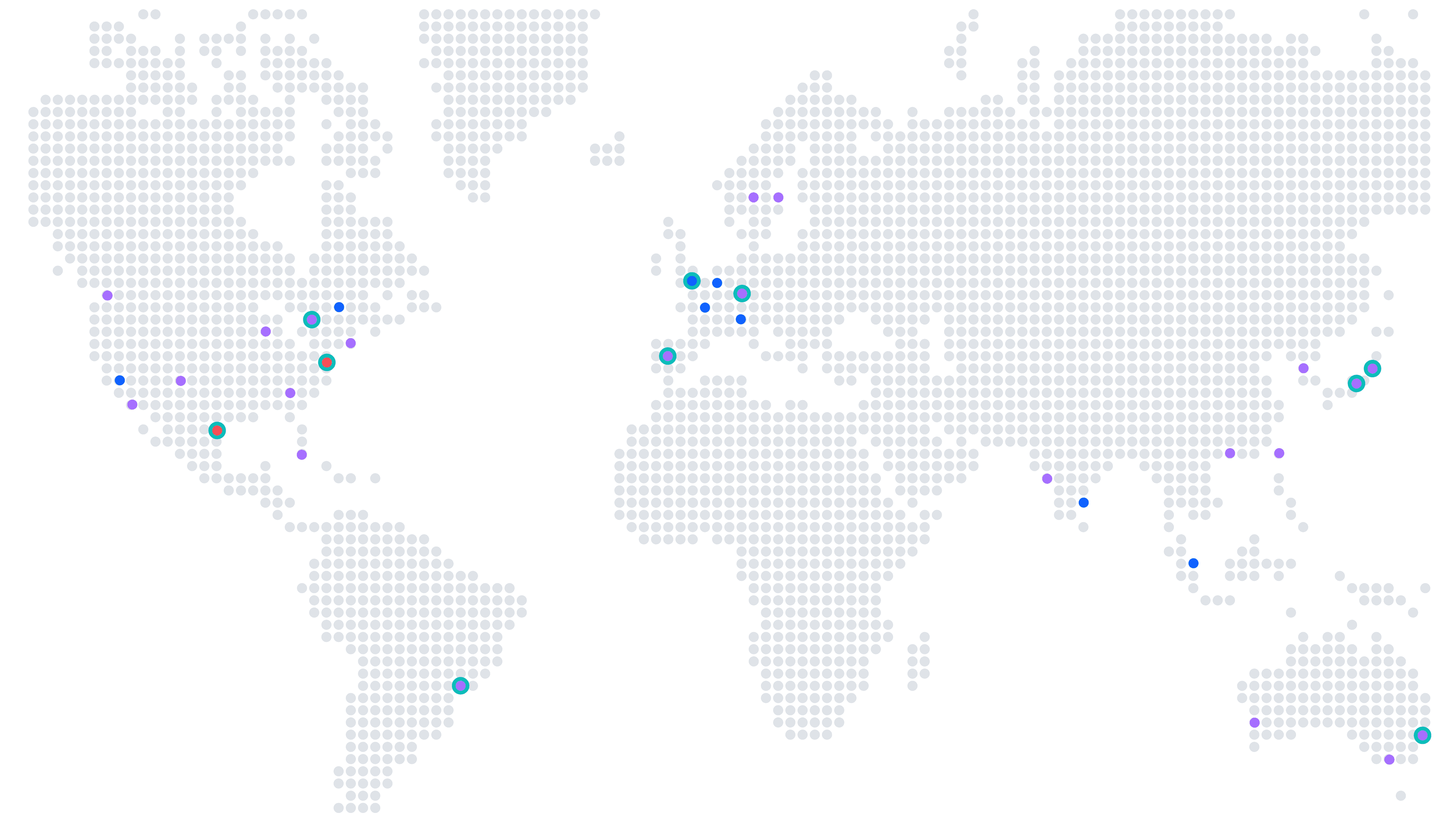

To help accelerate and improve IBM’s Granite model development pipeline, the team built efficient new processes to rapidly provision and use tens of thousands of CPUs, as well as marshal them together to massively scale out the data-curation pipeline. This led to one of the team’s main breakthroughs: They figured out how to provision a massive quantity of idle CPU capacity across the entire IBM Cloud datacenter network using an experimental feature of the IBM Cloud Code Engine. This required figuring out how to achieve high communication bandwidth between where the CPUs are located and where the data is stored. Every CPU in use would need to read information from the data source (in this case, Cloud Object Storage), process it, and then write information back. However, traditional object storage systems are not designed for high-performance repeated access to large volumes of data. This means that using object storage directly in such processing pipelines would leave many CPUs idling for extended periods of time while they wait on reads and writes.

To solve this problem, the team used IBM’s high-performance Storage Scale file system, which allowed them to transparently cache all active data and interact with it at a much higher bandwidth, and with lower latency. The processed data is then written back to the object storage only after multiple steps of the processing pipeline are completed. This architecture saves repeated read / write iterations between the CPUs and the cloud storage.
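The saving in round trips can be illustrated with a toy model. The sketch below does not use Storage Scale or Cloud Object Storage. `CountingStore` is a hypothetical in-memory stand-in that simply counts reads and writes, and the three string operations stand in for pipeline steps.

```python
class CountingStore:
    """Hypothetical stand-in for an object store that counts round trips."""

    def __init__(self, objects):
        self.objects = dict(objects)
        self.reads = 0
        self.writes = 0

    def get(self, key):
        self.reads += 1
        return self.objects[key]

    def put(self, key, value):
        self.writes += 1
        self.objects[key] = value


def run_step_by_step(store, key, steps):
    """Every step reads from and writes back to the object store."""
    for step in steps:
        data = store.get(key)
        store.put(key, step(data))


def run_with_staging(store, key, steps):
    """Read once, run every step on the cached copy, write back once."""
    cached = store.get(key)
    for step in steps:
        cached = step(cached)
    store.put(key, cached)


# Three placeholder steps: trim, lowercase, collapse whitespace.
STEPS = [str.strip, str.lower, lambda text: " ".join(text.split())]

naive = CountingStore({"doc": "  Hello   WORLD  "})
run_step_by_step(naive, "doc", STEPS)

staged = CountingStore({"doc": "  Hello   WORLD  "})
run_with_staging(staged, "doc", STEPS)

print(naive.reads, naive.writes)    # 3 3
print(staged.reads, staged.writes)  # 1 1
```

Both runs produce the same result, but the staged run touches the object store once in each direction regardless of how many steps are chained. The sketch counts round trips only and does not model bandwidth or latency.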

Over the last year, the team has been able to scale up to 100,000 vCPUs in the IBM Cloud to produce 40 trillion tokens for training AI models out of 14 petabytes of raw data from web crawls and other sources. They’ve been working on automating the pipelines they’ve built on Kubeflow on IBM Cloud. Zerfos said that the team has found that stripping HTML out of the Common Crawl data and mapping the content into markdown format was 24 times faster using their processes than other methods they have used. All the Granite code and language models that IBM has open-sourced over the last year have been trained on data that went through the team’s data-preparation process.

The team has turned much of their work into a community GitHub project called Data Prep Kit. It’s a toolkit for streamlining data preparation for LLM applications (right now for code and language models), supporting pre-training, fine-tuning, and RAG use cases. The modules in the kit are built on common distributed processing frameworks (including Spark and Ray), which allows developers to build their own custom modules that scale quickly across a variety of runtimes, from a laptop to a data center.
