IBM Quantum is working to bring useful quantum computing to the world. We’re using error mitigation techniques to extract value from near-term processors while we lay the groundwork for the fault-tolerant quantum-centric supercomputers of tomorrow.

As we follow that path, we expect to incrementally broaden the scope of applications for which quantum computers provide utility as our processors improve in scale, quality, and speed. Sampling from complicated probability distributions is an application where we foresee quantum computers providing increased value as we approach and enter the era of fault tolerance. This is bolstered by new research1 from our team published in Nature.

For this work, our team set out with three goals. We first wanted to find an algorithm we could run on near-term quantum devices. Second, we wanted that algorithm to provide a provable guarantee of producing the right answer for the problem. And third, we wanted the algorithm to provide value for a use case that people are actually interested in. Those criteria brought us to the problem of selecting random, representative items from a large set, called sampling. Specifically, we’d be sampling the Boltzmann distribution of the classical Ising model.

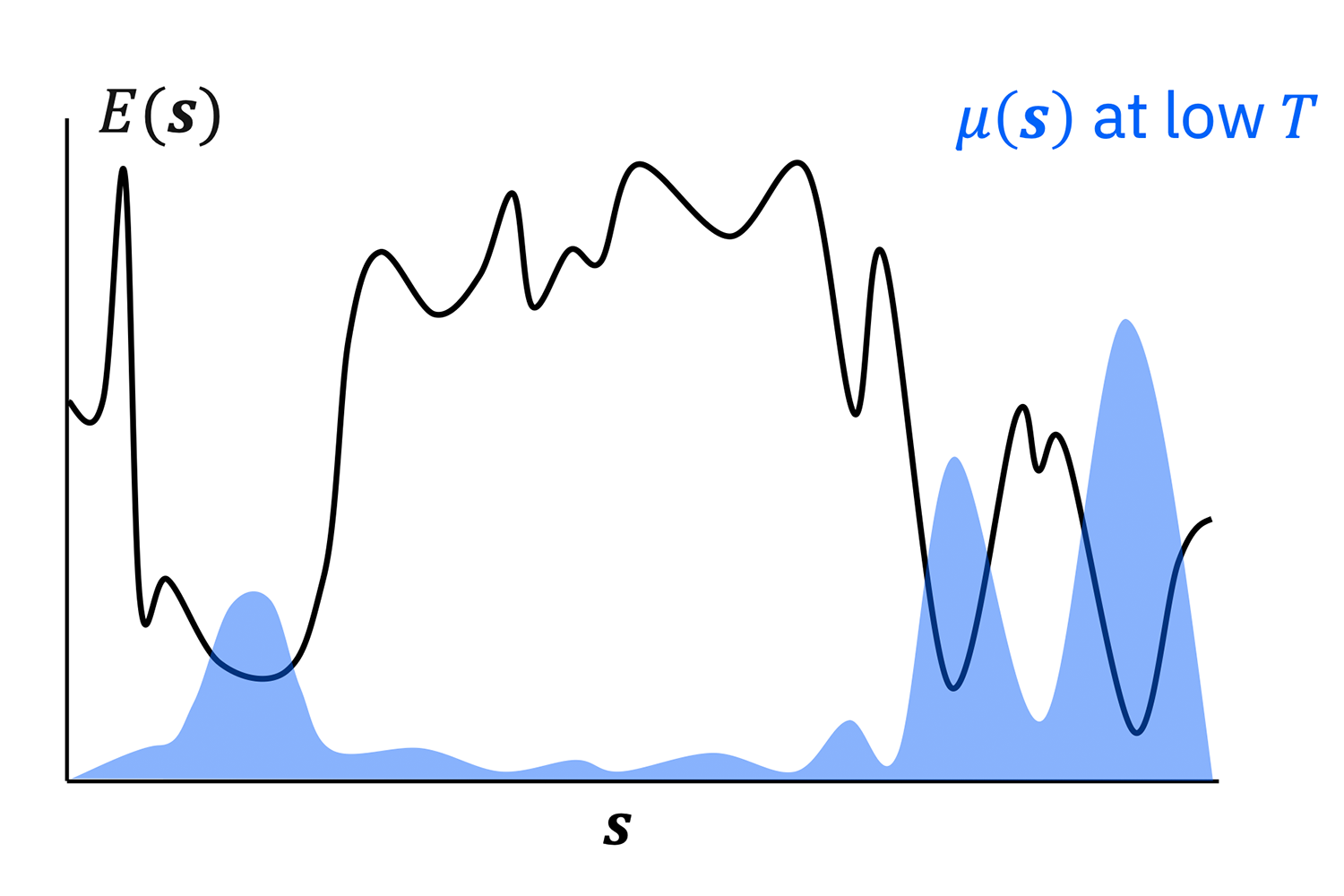

Essentially, the Ising model assigns values, we'll call them energies, to a set of binary numbers, also known as bitstrings. The Boltzmann distribution uses those energy values to calculate a probability for each of the bitstrings, based on a temperature. At low temperatures, the distribution sets high probabilities for bitstrings with low energies.

This might sound easy — we’re just looking for the lowest energy states for some system. But as we try to calculate these probabilities, we’re required to calculate a value called the partition function. The time needed to do so scales exponentially with the size of the bitstrings. So instead, if we want to understand the Boltzmann distribution efficiently, we have to try and create a representative sample. We want our computer to output bitstrings with the same probability we’d expect from the Boltzmann distribution.

It might sound esoteric, but this challenging problem has a variety of important applications. It allows you to calculate the magnetic properties of some materials, for example. It’s also useful for machine learning, specifically, as part of the training process in areas of deep learning. Sampling from this distribution can be a major computational bottleneck.

Today, we generate these random samples using a technique called Markov chain Monte Carlo (MCMC), of which the Metropolis-Hastings algorithm is a famous example. The idea is to calculate the energy of a bitstring, then attempt to jump to some new value. Each time you attempt to jump, you calculate something called the acceptance probability — the odds that you’ll successfully be allowed to jump to the new value, versus the odds that you have to stay put and try again.

Jumping to lower energy values is always allowed, but when you try to jump to higher values, the odds of being accepted decrease based on how high you try to jump. You repeat this process over and over again, jumping only when the acceptance probability allows. Naturally, you’ll start spending more time at certain states and less time at other states; those states where you spend more time are the states with higher probabilities in the Boltzmann distribution.

Quantum for the next, smart jump

So, where can the quantum computer come in? This approach is powerful at finding the correct Boltzmann distribution, but you can imagine it requires a lot of waiting around for your jumps to get accepted — it can get stuck. Quantum allows you to more intelligently choose where to jump next. First, you convert the bitstrings into qubit values. Then, you evolve your qubits based on the properties of the Ising model in your problem, and read out the next step. You use these two bitstrings to calculate the acceptance probabilities with a classical computer, then continue the process back and forth between quantum and classical.

How much faster does the quantum version work? It depends on the problem instance. But based on our simulations for an ideal quantum computer, we calculated an average-case speedup between cubic and quartic, depending on the size of the problem.

Those were just simulations, though. We also ran the algorithm on a 27-qubit IBM Quantum Falcon processor. And indeed, the quantum version did deliver a speedup over classical-only methods, as well as the correct answer, for the specific problems we tried.

The Ising model assigns an energy E(s) to every bitstring, s. The resulting Boltzmann distribution then assigns probabilities μ(s) based on these energies, and on a temperature T.

The quantum version did deliver a speedup over classical-only methods, as well as the correct answer, for the specific problems we tried.

We’re not reaping advantages from quantum computers running this algorithm just yet. However, we feel confident that it’ll provide gradually more value as our processors keep improving. Since the quantum computer is solely helping us pick the next step in the jump, the algorithm can still work if the quantum computer has an error every once in a while — it’ll just take longer. The quantum algorithm provides more of a speedup when there’s less noise, and becomes more like a classical method in the presence of more noise. But the final answer will eventually be correct, regardless.

This work demonstrates how we hope the future of quantum computing will play out. Of course, our ultimate goal is a fault-tolerant, universal quantum computer capable of solving a variety of problems. But as we work toward that goal, we can continue looking for useful speedups along the way. Our quantum Markov chain Monte Carlo is a perfect example of this. Even with a noisy quantum computer, it can deliver the correct answer. And as the scale, quality, and speed of our processors increase, so too will the speed at which they can solve this problem.

Quantum-enhanced Markov chain Monte Carlo with David Layden | Qiskit Seminar Series

Quantum-enhanced Markov chain Monte Carlo with David Layden