On August 8, 2021, IBM Quantum is retiring its square and bow-tie (triangular) topologies in favor of the heavy-hex lattice.

Overview

As of August 8, 2021, the topology of all active IBM Quantum devices will be based around the heavy-hex lattice. The heavy-hex lattice represents the fourth iteration of the topology for IBM Quantum systems and is the basis for the Falcon and Hummingbird quantum processor architectures. Each unit cell of the lattice consists of a hexagonal arrangement of qubits, with an additional qubit on each edge.
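The unit-cell description above can be sketched as a small graph. The following is a minimal illustration, not IBM's device layout: the qubit numbering and the helper names `heavy_hex_cell` and `degrees` are assumptions made for this example. It builds one isolated cell (six corner qubits plus one qubit on each edge) and then estimates the average degree of the infinite lattice by counting how many hexagons share each qubit.

```python
from collections import defaultdict

def heavy_hex_cell():
    """Return the couplings of one heavy-hex unit cell as (qubit, qubit) pairs.

    Hypothetical numbering: qubits 0-5 sit on the hexagon corners,
    qubits 6-11 sit on the six hexagon edges.
    """
    edges = []
    for corner in range(6):
        edge_qubit = 6 + corner
        next_corner = (corner + 1) % 6
        edges.append((corner, edge_qubit))       # corner -> qubit on the edge
        edges.append((edge_qubit, next_corner))  # qubit on the edge -> next corner
    return edges

def degrees(edges):
    """Count how many couplings touch each qubit."""
    deg = defaultdict(int)
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return dict(deg)

cell_edges = heavy_hex_cell()
cell_degrees = degrees(cell_edges)

# In the infinite lattice each corner is shared by three hexagons (degree 3)
# and each edge qubit by two hexagons (degree 2), so per unit cell:
corners_per_cell = 6 / 3
edge_qubits_per_cell = 6 / 2
avg_degree_bulk = (3 * corners_per_cell + 2 * edge_qubits_per_cell) / (
    corners_per_cell + edge_qubits_per_cell
)

print(len(cell_degrees), "qubits,", len(cell_edges), "couplings in one cell")
print("average degree in the bulk lattice:", avg_degree_bulk)
```

Under this counting the bulk heavy-hex lattice has an average degree of 12/5, compared with 4 for a bulk square lattice.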

The heavy-hex topology is a product of co-design between experiment, theory, and applications. It is scalable and offers reduced error rates while affording the opportunity to explore error-correcting codes. Based on lessons learned from earlier systems, the heavy-hex topology represents a slight reduction in qubit connectivity from previous-generation systems but, crucially, minimizes both qubit frequency collisions and spectator qubit errors that are detrimental to real-world quantum application performance.

In this tech report, we discuss the considerations needed when choosing the architecture for a quantum computer. Based on proven fidelity improvements and manufacturing scalability, we believe that the heavy-hex lattice is superior to a square lattice in offering a clear path to quantum advantage, from enabling more accurate near-term experimentation to reaching the critical goal of demonstrating fault-tolerant error correction. We demonstrate that the heavy-hex lattice is equivalent to the square lattice up to a constant overhead, and like other constant overheads such as choice of gate set, this cost is insignificant compared to the cost of mapping the problem itself.

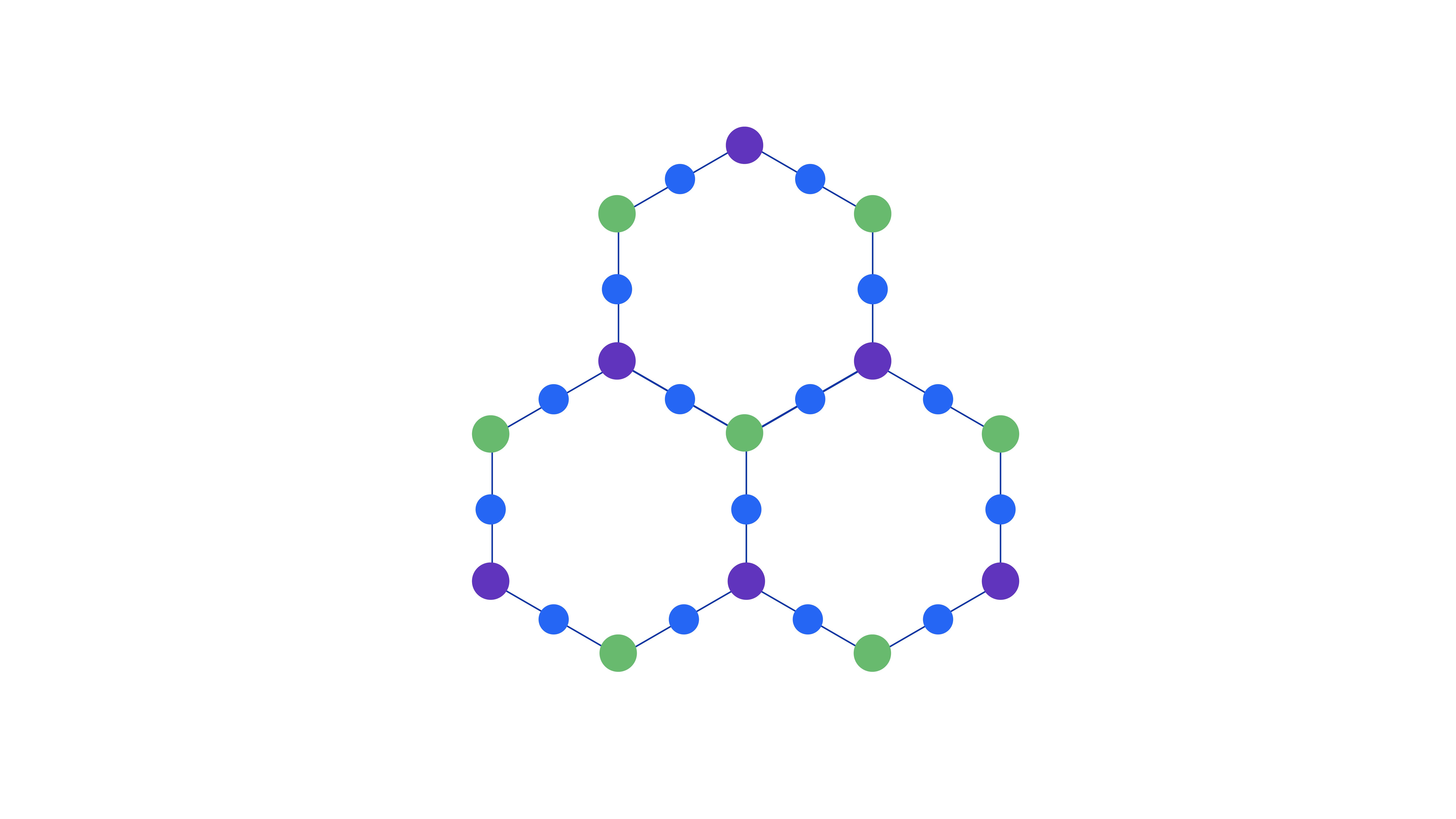

Figure 1: Three unit cells of the heavy-hex lattice. Colors indicate the pattern of three distinct frequencies for control (dark blue) and two sets of target qubits (green and purple).

Physical motivations

IBM Quantum systems make use of fixed-frequency qubits, where the characteristic properties of the qubits are set at the time of fabrication. The two-qubit entangling gate in such systems is the cross-resonance (CR) gate, where the control qubit is driven at the target qubit’s resonance frequency. See Fig. 1 for the layout of control and target qubits in the heavy-hex lattice. These frequencies must be off-resonant with neighboring qubit transition frequencies to prevent undesired interactions called “frequency collisions.”

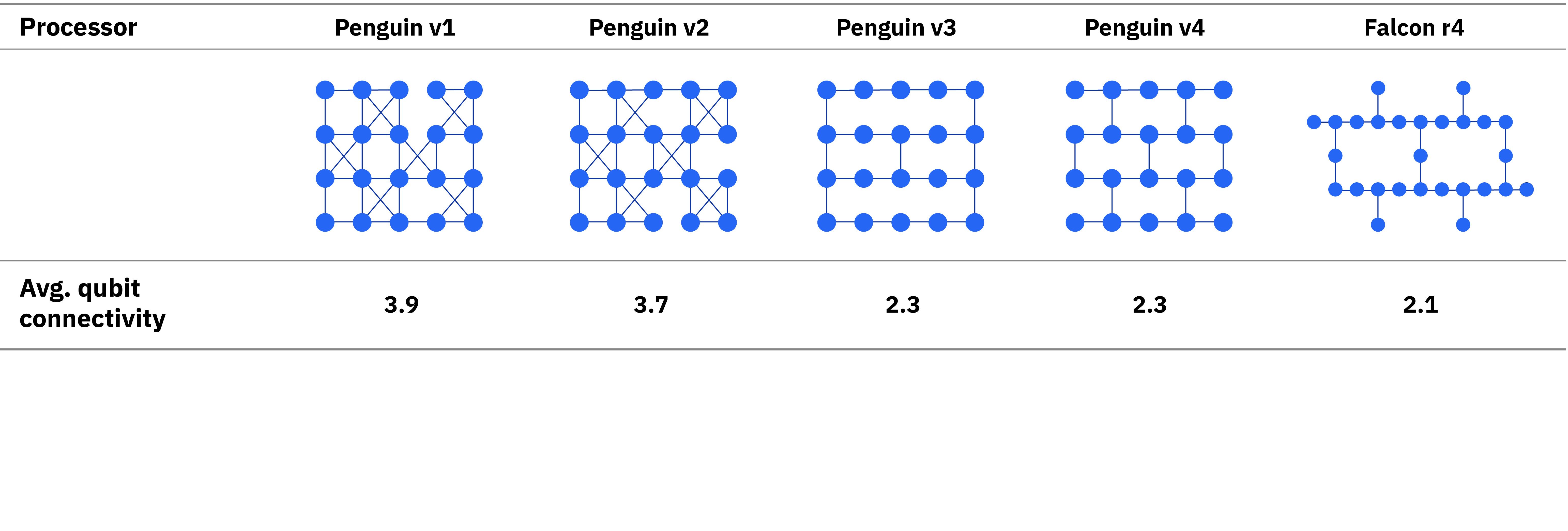

The larger the qubit connectivity, the more frequency conditions must be satisfied, and degeneracies amongst transition frequencies become more likely. In addition, fabrication imperfections can require disabling an edge in the system connectivity (e.g., see Penguin v1 and v2 in Fig. 2). All of these effects can affect device performance and add hardware overhead.
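To make the link between connectivity and the number of frequency conditions concrete, here is a rough counting sketch. It assumes a simplified model that is not taken from the report: one condition per directly coupled pair, plus one per pair of qubits that share a common neighbor. The function names `count_frequency_conditions` and `star` are hypothetical.

```python
from itertools import combinations

def count_frequency_conditions(edges):
    """Count the pairs a toy collision model would need to check.

    Returns (coupled_pairs, shared_neighbor_pairs). Real collision criteria
    involve specific transition frequencies and are not modeled here.
    """
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    coupled_pairs = {frozenset(edge) for edge in edges}

    shared_neighbor_pairs = set()
    for nbrs in neighbors.values():
        # Every pair of neighbors of the same qubit shares that qubit.
        for a, b in combinations(sorted(nbrs), 2):
            shared_neighbor_pairs.add((a, b))

    return len(coupled_pairs), len(shared_neighbor_pairs)

def star(degree):
    """A single qubit (label 0) coupled to `degree` neighbors."""
    return [(0, leaf) for leaf in range(1, degree + 1)]

for degree in (2, 3, 4):
    print(degree, count_frequency_conditions(star(degree)))
```

In this toy count the coupled pairs grow linearly with the degree, while the shared-neighbor pairs grow as d(d-1)/2, so a degree-four qubit carries twice as many shared-neighbor conditions as a degree-three qubit.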

Figure 2: Left to right, evolution of the topologies for IBM Quantum systems, including the average qubit connectivity.

A similar set of frequency collisions appears in flux-tunable qubits as avoided-crossings in implementing flux control.1 Moreover, tunable qubits come at the cost of introducing flux noise which will reduce coherence, and the flux control adds scaling challenges to larger architectures with increased operational complexity in qubit tune-up and decreased gate fidelity caused by pulse distortions along the flux line.2

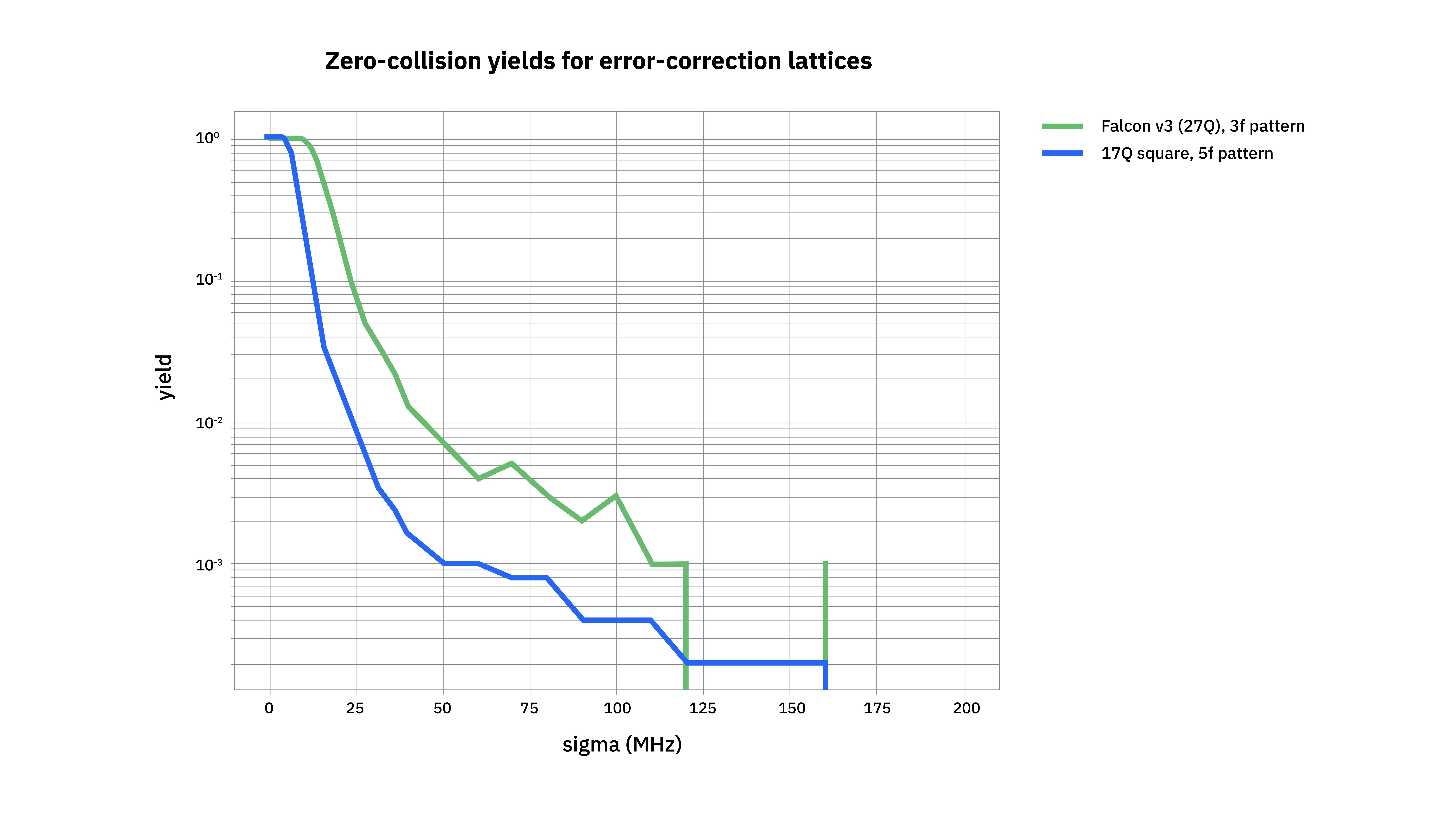

As shown in Fig. 3, the decrease in qubit connectivity offered by the heavy-hex lattice, as well as the selected pattern of control and target qubit frequencies, gives an order of magnitude increase3 in zero-frequency collision yield as compared to other choices for system topology.

Figure 3: Simulations of system yields for collision free devices for heavy-hex and square topologies as a function of qubit frequency variability.
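A yield simulation of this kind can be sketched as a small Monte Carlo experiment. The version below is a toy model under stated assumptions and does not reproduce the simulations behind Fig. 3: it covers a single heavy-hex cell, checks only nearest-neighbor degeneracy, and uses hypothetical design frequencies, a hypothetical collision window, and an assumed placement of control qubits on the hexagon edges.

```python
import random

def heavy_hex_cell_ring():
    """One heavy-hex cell as a 12-qubit ring: even labels are corners, odd labels are edge qubits."""
    return [(q, (q + 1) % 12) for q in range(12)]

# Hypothetical design frequencies in GHz (illustrative values only).
CONTROL_GHZ, TARGET_A_GHZ, TARGET_B_GHZ = 5.00, 5.07, 5.14

def design_frequencies():
    """Assign three frequencies: edge qubits as controls, corners alternating between two targets."""
    freqs = {}
    for q in range(12):
        if q % 2 == 1:
            freqs[q] = CONTROL_GHZ
        elif q % 4 == 0:
            freqs[q] = TARGET_A_GHZ
        else:
            freqs[q] = TARGET_B_GHZ
    return freqs

def is_collision_free(edges, freqs, window_ghz):
    """Toy criterion: no coupled pair may be closer in frequency than window_ghz."""
    return all(abs(freqs[a] - freqs[b]) >= window_ghz for a, b in edges)

def collision_free_yield(sigma_ghz, window_ghz=0.017, trials=2000, seed=0):
    """Fraction of sampled devices with no collision at fabrication spread sigma_ghz."""
    rng = random.Random(seed)
    edges = heavy_hex_cell_ring()
    design = design_frequencies()
    good = 0
    for _ in range(trials):
        sample = {q: rng.gauss(f, sigma_ghz) for q, f in design.items()}
        if is_collision_free(edges, sample, window_ghz):
            good += 1
    return good / trials

for sigma in (0.0, 0.02, 0.05, 0.5):
    print(f"sigma = {sigma:.2f} GHz -> yield = {collision_free_yield(sigma):.3f}")
```

Swapping in a different edge list and frequency pattern would allow a like-for-like comparison between topologies, provided the same collision criteria are applied to both.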

The sparsity of the heavy-hex topology with fixed-frequency qubits also improves overall gate fidelity4 by limiting spectator qubit errors: errors generated by qubits that are not directly participating in a given two-qubit gate operation. These errors can degrade system performance and do not present themselves when the gate is performed in isolation; one- and two-qubit benchmarking techniques are not sensitive to these errors.

However, spectator errors matter a great deal when we run circuits. The rate of spectator errors is directly related to the system connectivity. The heavy-hex connectivity reduces the occurrence of these spectator errors by placing the control qubit on only those edges connected to the target qubits (Figure 1).

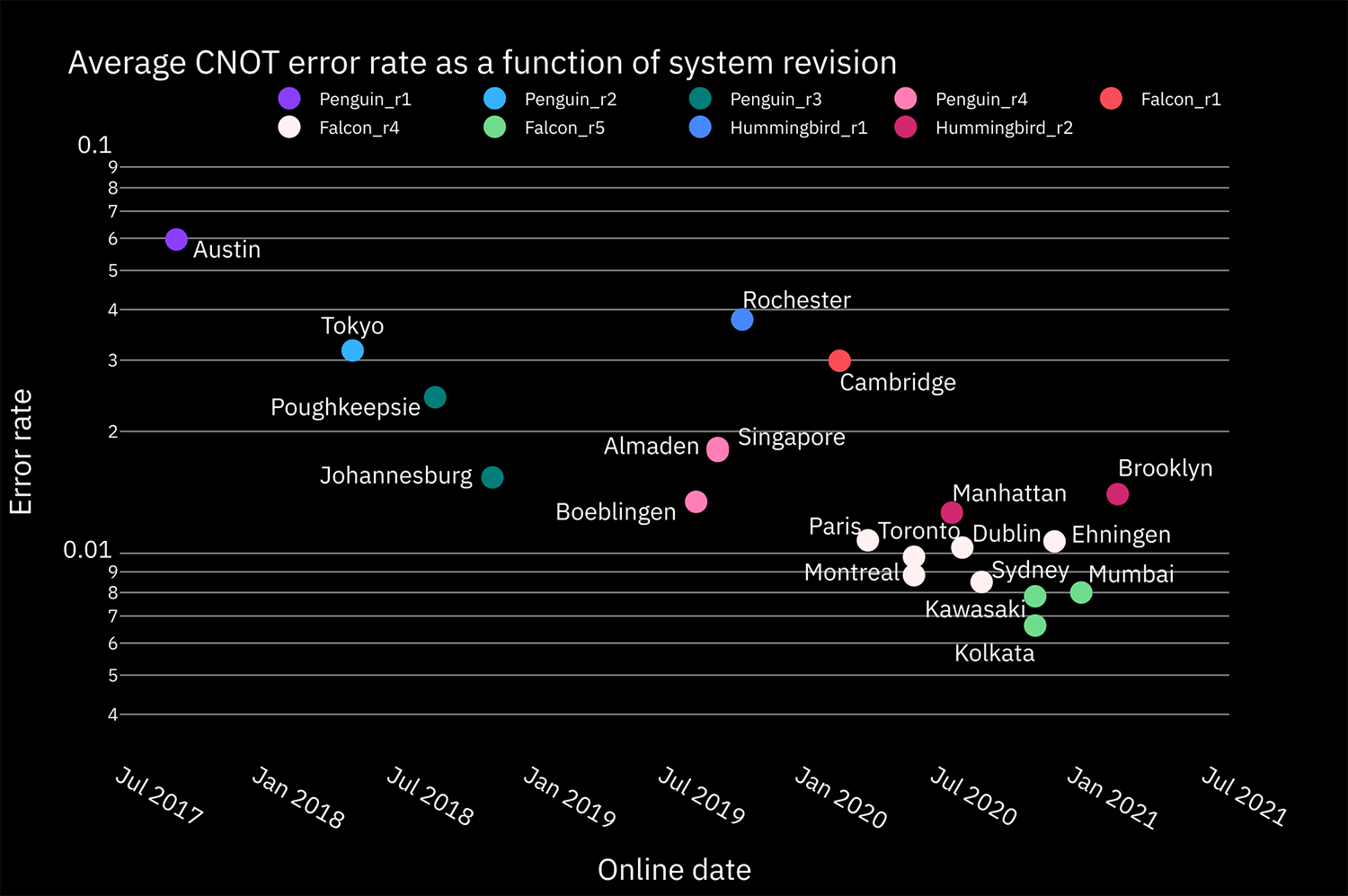

Figure 4 shows the average CNOT error rates for four generations of Penguin quantum processors along with those of the Falcon and Hummingbird families that utilize the heavy-hex topology. The reduction in frequency collisions and spectator errors allows devices to achieve an average CNOT error rate better than 1 percent across the device, with isolated two-qubit gates approaching 0.5 percent. This represents a factor-of-three decrease compared to the error rates on the best Penguin device with a square layout. Additional techniques for reducing spectator errors are given in Ref. 5.

Higher Quantum Volume, higher computing performance

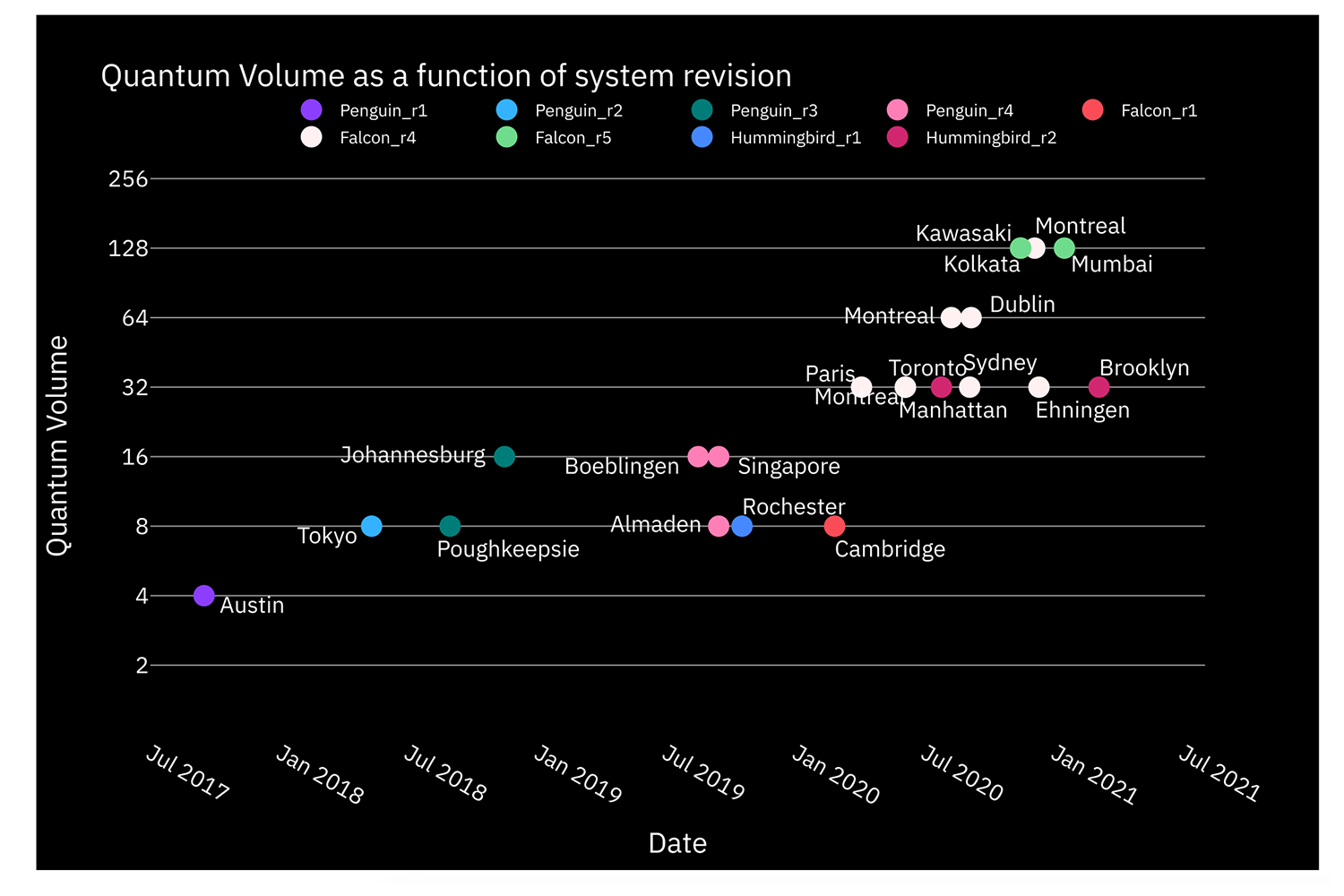

Quantum Volume (QV) is a holistic, hardware-agnostic quantum system benchmark that encapsulates system properties such as the number of qubits, connectivity, and gate, spectator, and measurement errors into a single numerical value by finding the largest square circuit that a quantum device can reliably execute.6

Figure 4: Average CNOT gate error rates for Penguin-, Falcon-, and Hummingbird-based IBM Quantum systems.

Higher quantum volumes directly equate to higher processor performance. Gate errors measured by single- or two-qubit benchmarking do not reveal all errors in a circuit, such as crosstalk and spectator errors, and estimating circuit errors from the gate errors is non-trivial. In contrast, QV readily incorporates all possible sources of noise in a system, and measures how good the system is at implementing average quantum circuits. This allows users to identify the best system for their application.
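As a rough illustration of how a QV number is obtained from measurement data, the sketch below uses the standard heavy-output criterion: a width passes if the measured probability of "heavy" outputs (bitstrings whose ideal probability exceeds the median) is above 2/3. The function names and the example numbers are hypothetical, and the statistical confidence test required by the full protocol is omitted.

```python
from statistics import median

def heavy_outputs(ideal_probs):
    """Bitstrings whose ideal probability exceeds the median.

    ideal_probs must list every bitstring of the circuit width.
    """
    med = median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > med}

def heavy_output_probability(ideal_probs, counts):
    """Fraction of measured shots that landed on a heavy output."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(n for bits, n in counts.items() if bits in heavy) / shots

def quantum_volume(hop_by_width, threshold=2 / 3):
    """Return 2**n for the largest width n whose mean heavy-output probability passes.

    Returns 1 if no width passes. Confidence intervals are not checked here.
    """
    passing = [width for width, hop in hop_by_width.items() if hop > threshold]
    return 2 ** max(passing) if passing else 1

# Made-up two-qubit example: ideal distribution and measured counts.
ideal = {"00": 0.40, "01": 0.10, "10": 0.35, "11": 0.15}
measured = {"00": 350, "01": 120, "10": 330, "11": 200}
hop = heavy_output_probability(ideal, measured)

# Made-up mean heavy-output probabilities per circuit width.
qv = quantum_volume({2: 0.75, 3: 0.72, 4: 0.70, 5: 0.69, 6: 0.61})
print(hop, qv)
```

In practice the heavy-output probability is averaged over many random square circuits per width before it is compared with the threshold.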

Figure 5 shows the evolution of Quantum Volume over IBM Quantum systems, demonstrating that only heavy-hex based Falcon and Hummingbird systems can achieve QV32 or higher. Parallel improvements in gate design, qubit readout, and control software, such as those in Ref. 5, also play an important role in increasing QV values faster than the anticipated yearly doubling.

Figure 5: Quantum Volume as a function of system release date for IBM Quantum Penguin (20 qubits), Falcon (27 qubits), and Hummingbird (65 qubits) systems. The Falcon and Hummingbird are based on the heavy-hex topology.

Development of quantum error-correcting codes is one of the primary areas of research as gate errors begin to approach fault-tolerant thresholds. The surface code, implemented on a square grid topology, is one such example. However, as already discussed and experimentally verified,7 frequency collisions are common in fixed-frequency qubit systems with square planar layouts. As such, researchers at IBM Quantum developed a new family of hybrid surface and Bacon-Shor subsystem codes that are naturally implemented on the heavy-hex lattice.8

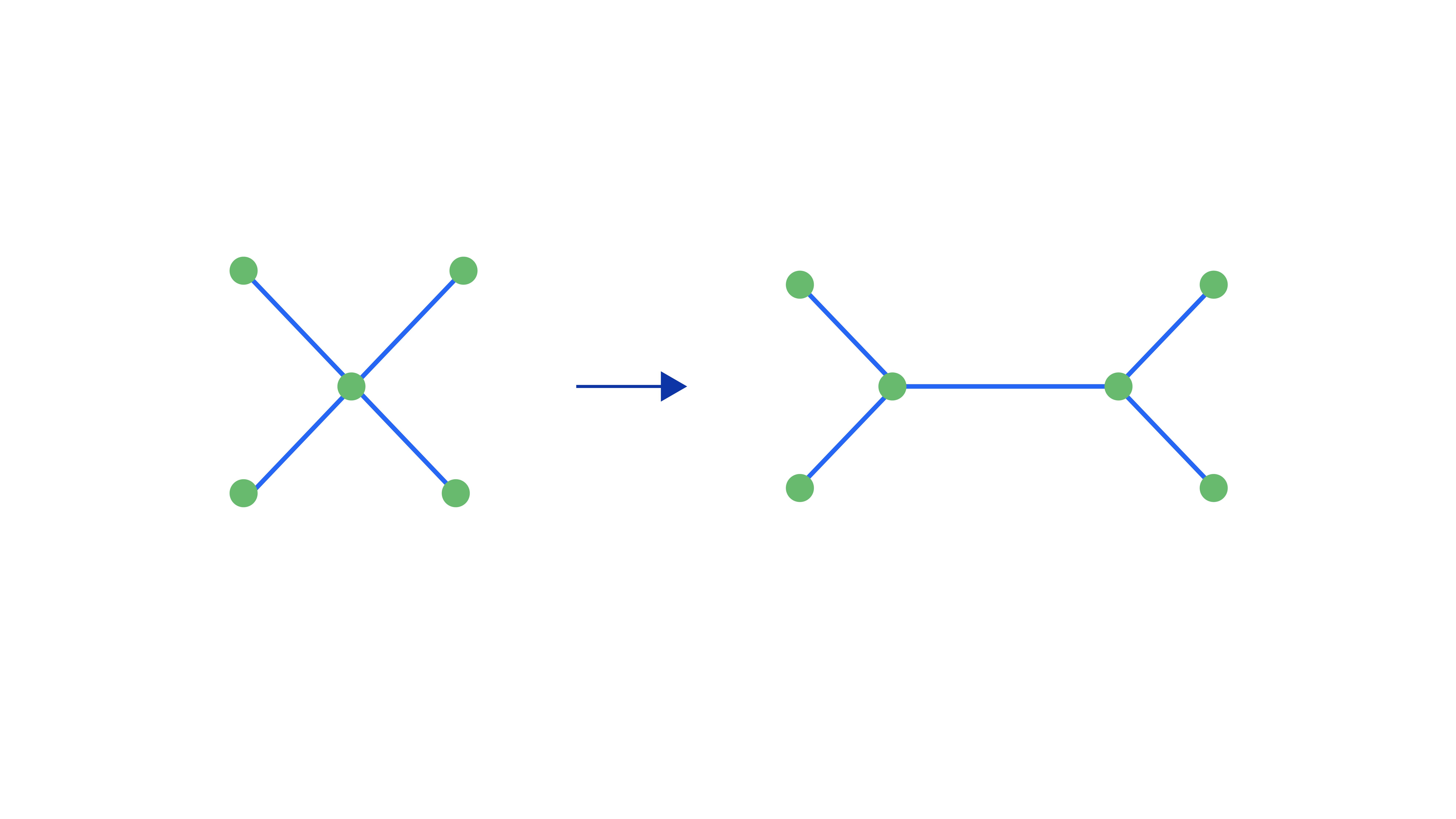

Similar to the surface code, the heavy-hex code also requires a four-body syndrome measurement. However, the heavy-hex code reduces the required connectivity by replacing a degree-four node with two degree-three nodes, as presented in Fig. 6.

Figure 6: Reduction of a degree four node into two degree three nodes compatible with the heavy-hex lattice.

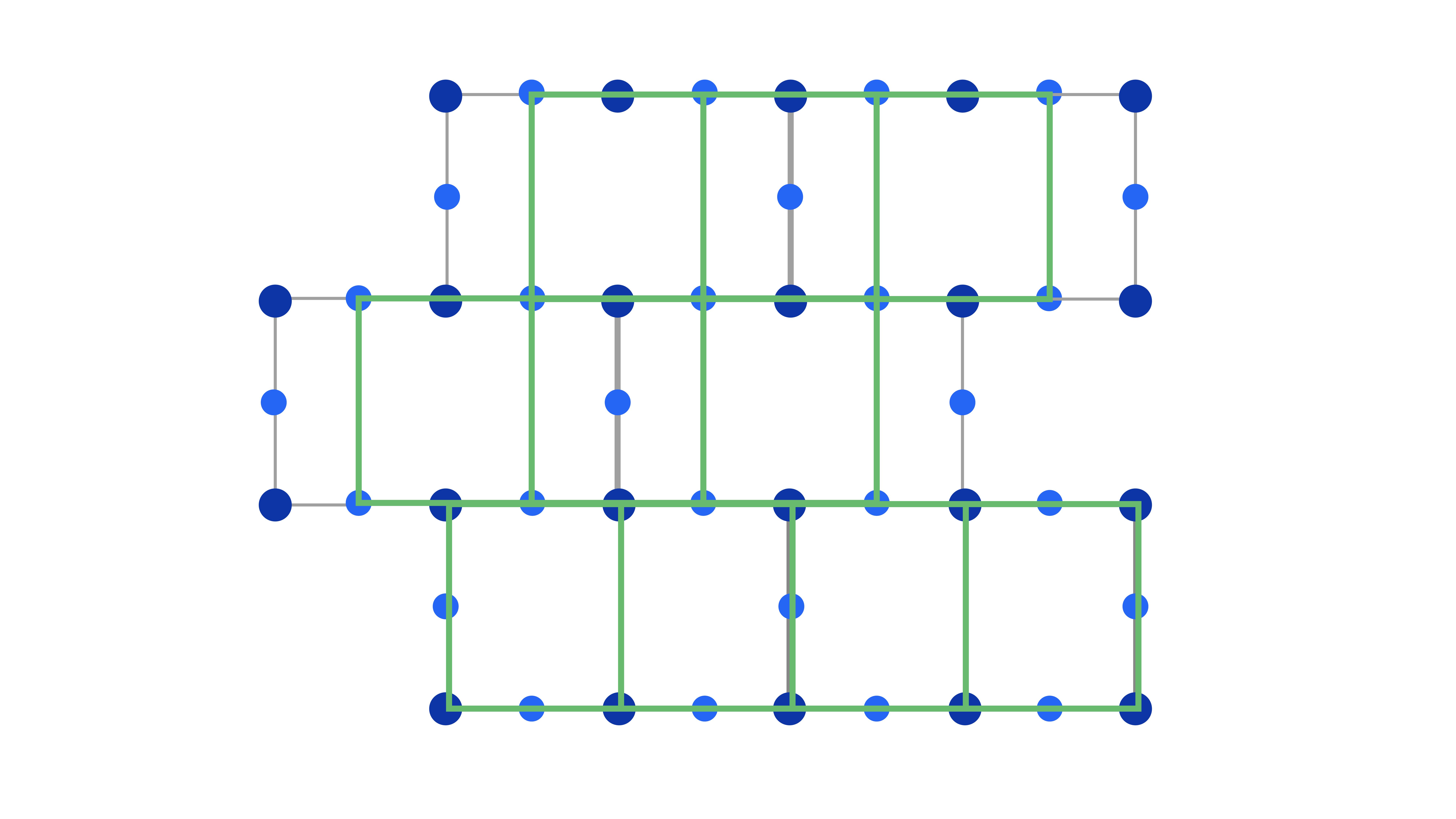

Mapping heavy-hex to square lattices

The connectivity of other lattices, such as the square lattice, can be simulated on the heavy-hex lattice with constant overhead by introducing swap operations within a suitably chosen unit cell. The vertices of the desired virtual lattice can be associated to subsets of vertices in the heavy-hex lattice such that nearest-neighbor gates in the virtual lattice can be simulated with additional two-qubit gates.

Taking the square lattice as an example, there are a variety of ways to associate a unit cell of the heavy-hex lattice to the square lattice. If we draw the hexagons as 3x5 rectangles, one natural choice places the qubits of the square lattice on the horizontal edges of the heavy-hex lattice, see Figure 7.

Figure 7: Direct mapping between heavy-hex and square lattices.

Suppose the goal is to apply an arbitrary two-qubit gate, U(4), between each pair of neighboring qubits in the virtual lattice. This can be accomplished with constant overhead, in depth 14, of which eight steps involve only swap gates. Each qubit individually participates in six swap gates. Alternatively, these swaps might be replaced by teleported gates, at potentially lower constant cost, if the desired interactions correspond to Clifford gates.
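The idea of simulating a missing connection with swaps can be illustrated with a generic routing estimate. This sketch does not reproduce the depth-14 schedule described above. It assumes a single heavy-hex cell modeled as a 12-qubit ring, one-way routing along a shortest path, and the standard decomposition of a swap into three CNOT gates. The names `shortest_distance` and `swap_overhead` are hypothetical.

```python
from collections import deque

def heavy_hex_cell_ring():
    """One heavy-hex cell as a 12-qubit ring: even labels are corners, odd labels are edge qubits."""
    return [(q, (q + 1) % 12) for q in range(12)]

def shortest_distance(edges, start, goal):
    """Breadth-first search for the number of couplings between two qubits."""
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        qubit, dist = queue.popleft()
        if qubit == goal:
            return dist
        for nxt in neighbors.get(qubit, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError("qubits are not connected")

def swap_overhead(edges, a, b):
    """Swaps (and equivalent CNOTs) needed to make qubits a and b adjacent.

    Moving one qubit along a shortest path takes distance - 1 swaps;
    each swap is counted as three CNOT gates.
    """
    swaps = max(shortest_distance(edges, a, b) - 1, 0)
    return swaps, 3 * swaps

ring = heavy_hex_cell_ring()
print(swap_overhead(ring, 0, 2))  # adjacent corners, separated by one edge qubit
print(swap_overhead(ring, 0, 6))  # opposite corners of the hexagon
```

Because the unit cell has a fixed size, the worst-case count within a cell is a constant, which is the sense in which the mapping overhead does not grow with the size of the lattice.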

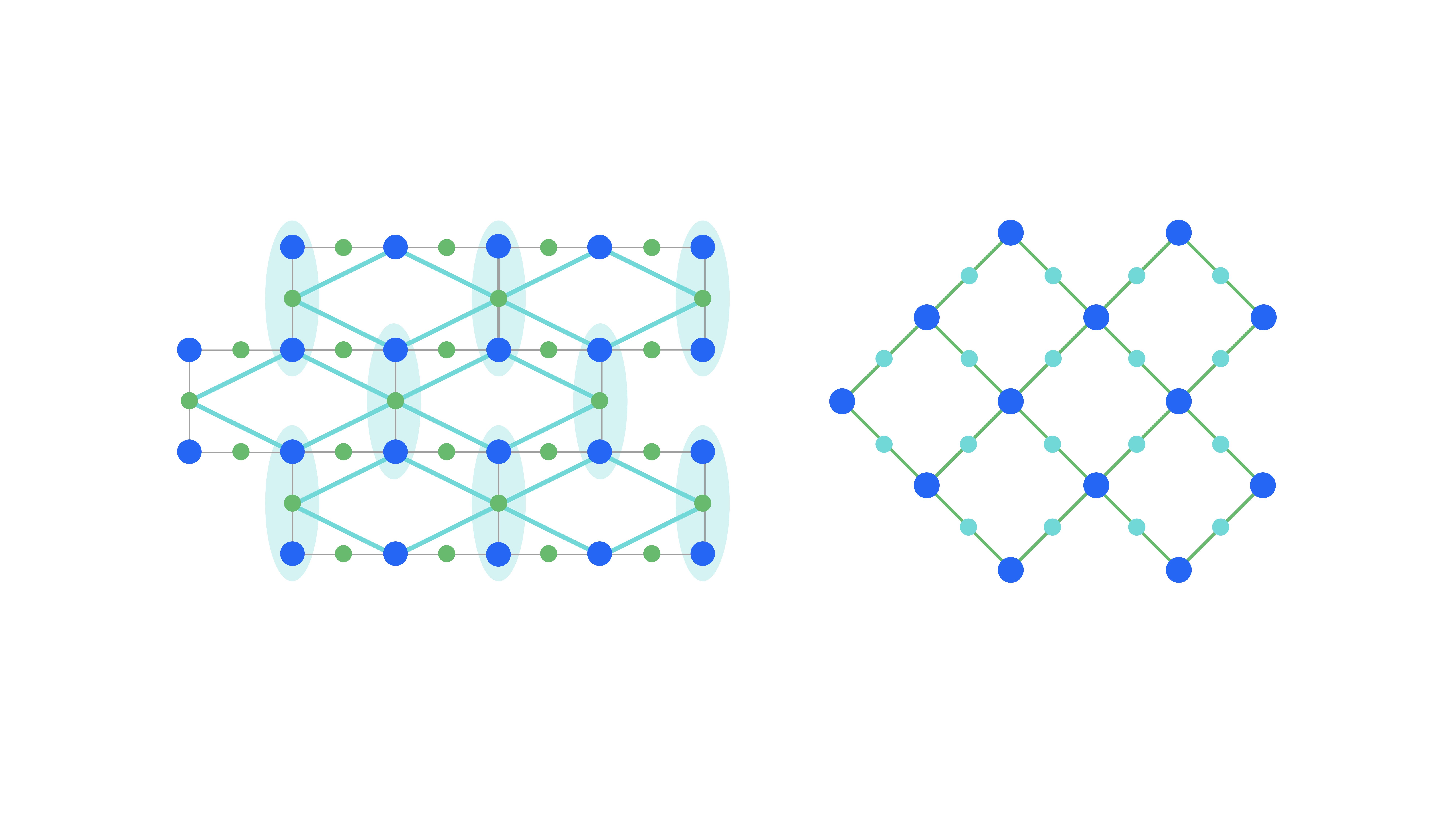

Other mappings exist as well and expose new possibilities for tradeoffs and optimizations. For example, an interesting alternative mapping encodes the qubits of the square lattice into 3-qubit repetition codes on the left and right edges of each 3x5 rectangle (Figure 8, Left). This creates an effective heavy-square lattice where encoded qubits are separated by a single auxiliary qubit (Figure 8, Right). In this encoding we can apply diagonal interactions in parallel along the vertical or horizontal direction of the heavy-square lattice. Since swaps occur in parallel between these two rounds of interactions, the total depth is only two rounds of swaps and two rounds of diagonal gates.

There are relatively simple circuits for applying single-qubit gates to the repetition code qubits whose cost is roughly equivalent to a swap gate. Since none of these operations is necessarily fault-tolerant, the error rate will increase by as much as a factor of three, but post-selection can be done for phase-flip errors while one takes advantage of the gains from the fact that the code itself corrects a single bit-flip error. As mentioned, the cost of the encodings described above is a constant and is thus on equal footing with other constant overheads such as the choice of gate set used. These should be compared with the cost of mapping the problem itself to the quantum computer, which might have a polynomial overhead.
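The single bit-flip correction mentioned above can be shown with a classical sketch of the 3-qubit repetition code. It covers only the bit-flip side: phase-flip errors, post-selection, and the non-fault-tolerant gate circuits are not modeled. The closed-form error rate assumes independent bit flips with probability p on each qubit, which is an assumption of this example rather than a statement from the report.

```python
def encode(bit):
    """Encode one logical bit into three physical copies."""
    return [bit, bit, bit]

def apply_bit_flips(codeword, positions):
    """Return a copy of the codeword with the given positions flipped."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(codeword)]

def decode(codeword):
    """Majority vote: recovers the logical bit if at most one copy flipped."""
    return int(sum(codeword) >= 2)

def logical_error_rate(p):
    """Probability that two or three of the copies flip, for independent flips at rate p."""
    return 3 * p**2 * (1 - p) + p**3

# Any single bit flip is corrected.
for logical in (0, 1):
    for position in range(3):
        assert decode(apply_bit_flips(encode(logical), {position})) == logical

# Two flips defeat the majority vote.
print(decode(apply_bit_flips(encode(0), {0, 1})))

# A 1 percent physical flip rate gives roughly a 0.03 percent logical flip rate.
print(logical_error_rate(0.01))
```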

Figure 8: Encoding into the 3-qubit repetition code (left) leads to a logical heavy-square lattice (right).

Conclusion

We have demonstrated why the IBM Quantum heavy-hex lattice is a promising platform for quantum computation, both today and tomorrow. This topology has proven its worth in terms of both scalability and performance by improving device error rates. These improvements are captured in system benchmarks such as Quantum Volume that continue to increase at an exponential rate. The connectivity of the heavy-hex lattice can be mapped onto other canonical lattices such as the square lattice with constant cost overhead; this overhead is negligible compared to encoding costs.

Additionally, improvements in gate errors offered by the heavy-hex lattice will significantly offset the overhead from additional mapping gates. We are confident that this platform will allow continuing improvements in both device fidelity and size and will beat square lattices in the race to quantum advantage.