Cars “see around corners” in EU’s CLASS project

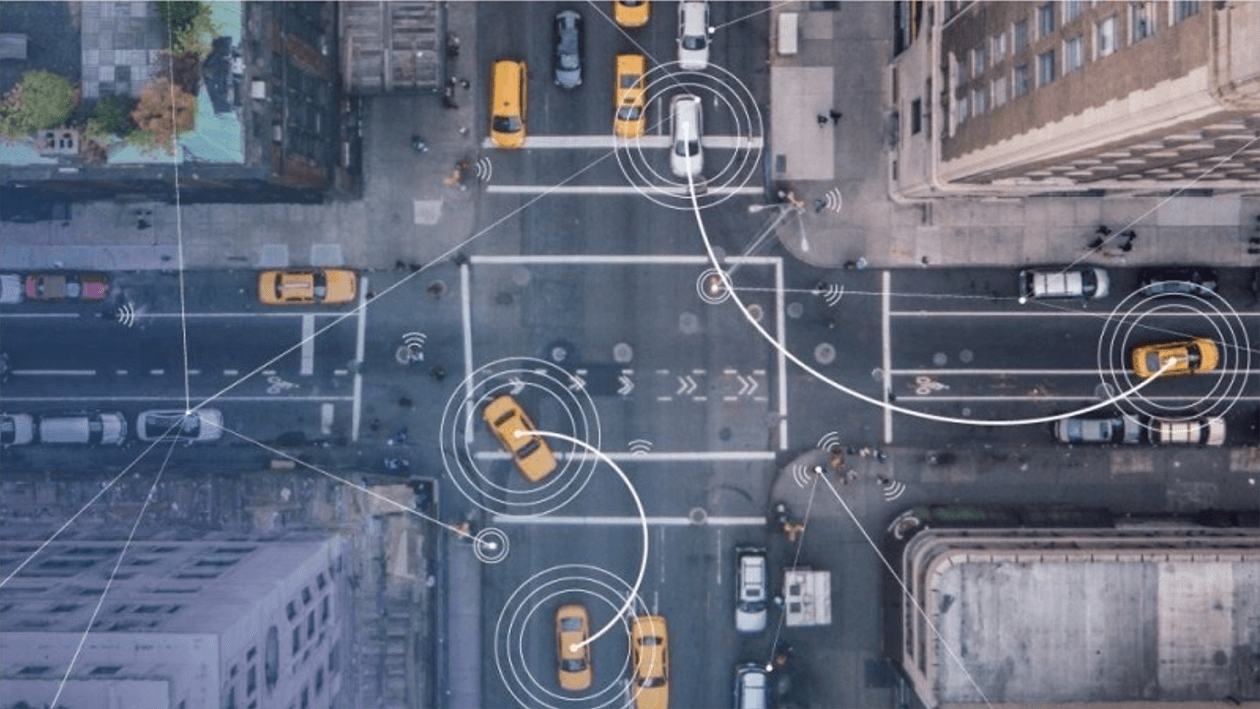

Traffic-heavy area of Modena, Italy used as urban lab to develop sensor tech that sees through nearby vehicles, around corners, behind buildings to avoid collisions.

It wasn’t too long ago that power steering and hands-free calling were extras in a car. But there’s one feature that’s still lagging: automatic, real-time safety and collision-avoidance sensing while driving.

Enter the EU CLASS research project.

Led by Spain’s Barcelona Supercomputing Center (BSC), the project ran from 2018 until June 2021. The consortium, which also included the University of Modena and Reggio Emilia (UNIMORE) in Italy, the city of Modena, Atos, carmaker Maserati and our IBM Research team in Haifa, Israel, turned a traffic-heavy area of Modena into an urban laboratory.

We’ve shown that it’s possible to equip a city and its vehicles with sensors so that vehicles can “know” what’s behind nearby objects and “see” around corners, helping drivers avoid collisions and improving the flow of traffic.

Today’s car sensor systems are limited. They can’t “see” through a building or through a truck in the next lane. And drivers exiting parking spaces, even in a car equipped with all the latest bells and whistles, still might not see an approaching vehicle or person if they are blocked by other obstacles in adjacent spaces. Similarly, cars aren’t adequately equipped to quickly respond if, say, a child jumps out from behind a parked car. No existing sensor can give a car (or its driver) an early warning for that.

Newer cars are usually equipped with an extensive, typically cellular-based internet connection. Many streets in most of the world’s larger cities are regularly monitored by cameras and other sensors belonging to the local municipality. If we could share information from those street sensors with vehicles driving on adjacent roads, cars would be able to “sense” oncoming dangers from different perspectives and different origins, in addition to what they can see and sense on their current driving path.

Cars with sensors and connectivity driving on streets in opposite directions would then become a source of information in areas not covered by the municipality.

That’s exactly what CLASS set out to do.

As part of the project, we developed an experimental prototype of a system that integrates edge computing with cloud infrastructures, gathering multiple information sources from the municipality and other vehicles to be cross-checked in real time.

We created an infrastructure that combines cloud and edge computing infrastructures for big data analysis, with significant emphasis on response times.

A response time is the amount of time that passes from the moment data enters a system until an associated insight or possible outcome stemming from that data is delivered to its destination. Specifically, we sought to reduce car accidents by significantly increasing cars’ sensing capacities, even for events taking place beyond the drivers’ and the sensors’ field of view or monitoring.
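To make the definition concrete, here is a minimal sketch of how a response time could be measured against a deadline. It is not taken from the CLASS code base: the `Reading` type, the function names and the 100 ms budget are all hypothetical, chosen only for illustration.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Reading:
    """A piece of sensor data, stamped when it enters the system (hypothetical type)."""
    payload: dict
    ingested_at: float = field(default_factory=time.monotonic)


def response_time(reading: Reading, delivered_at: float) -> float:
    """Seconds between the data entering the system and the insight being delivered."""
    return delivered_at - reading.ingested_at


def within_budget(reading: Reading, delivered_at: float, budget_s: float = 0.1) -> bool:
    """True if the insight arrived inside the latency budget (0.1 s is an assumed value)."""
    return response_time(reading, delivered_at) <= budget_s
```

In a live pipeline, `delivered_at` would be taken with `time.monotonic()` at the moment the alert reaches its destination, so that clock adjustments do not distort the measurement.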

First, we equipped the streets with advanced sensors that connected to the city’s main data center through an underground optical network infrastructure. The data center effectively became the project’s “cloud.” We then outfitted an experimental Maserati Quattroporte sedan and Levante SUV with a suite of sensors as well, including HD cameras, LIDARs and GPS—all connected to the city's infrastructure with a dedicated LTE cell.

We also retrofitted the two cars with an embedded Nvidia Jetson GPU and a laptop that served as a mock-up console for the onboard Advanced Driver Assistance System (ADAS) that would allow the driver to receive CLASS notifications from the city’s data center. Relying on ADAS sensors made CLASS compatible with any “category 2” or higher vehicle, a group that includes the CLASS test cars and most modern cars. CLASS provided the added capability of automated sensing of imminent but unseen dangers with the help of information sharing. This same sensing capability could later be used as a source of information for a fully autonomous vehicle (categories 5 and 6) as a safety differentiator.

During CLASS experiments, the Quattroporte and Levante roamed Modena’s streets, sending sensor data to the city. Potential alerts were then presented to the in-vehicle laptop that served as an ADAS mock-up. In the future, we imagine these warning capabilities to be embedded in any ADAS device—a physical one built into a car or any smartphone car app, and coupled with navigation or other multimedia.

For Maserati’s professional test drivers, all these protocols and components amounted to a single new channel of notifications that appeared on the ADAS console. The notifications alerted the drivers of possible obstacles and collisions well before they might have happened—sometimes well before they could even be seen—giving them enough time to respond.

The key to CLASS’s operation was the integration of real-time vehicle-to-cloud information from city cameras and from sensors on vehicles traveling nearby. Data processing included detection of the objects captured by the sensors (video, LIDAR, and radar), analysis of the future trajectories of those traffic objects, and forecasts of potential accidents based on those trajectories.
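The trajectory and collision steps can be illustrated with a small sketch. It assumes a simple constant-velocity motion model and a closest-approach test, which is not necessarily the model used in CLASS. The `Track` type, the function names, the 2 m warning radius and the 5 s horizon are hypothetical values picked for the example.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Track:
    """A detected traffic object: position in metres, velocity in metres per second."""
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(a: Track, b: Track, horizon_s: float = 5.0) -> Tuple[float, float]:
    """Return (time, distance) of closest approach within the horizon,
    assuming both objects keep their current velocity."""
    dx, dy = b.x - a.x, b.y - a.y          # relative position
    dvx, dvy = b.vx - a.vx, b.vy - a.vy    # relative velocity
    speed_sq = dvx * dvx + dvy * dvy
    # With no relative motion the gap never changes, so evaluate it at t = 0.
    t = 0.0 if speed_sq == 0 else -(dx * dvx + dy * dvy) / speed_sq
    t = min(max(t, 0.0), horizon_s)        # only look at the near future
    return t, math.hypot(dx + dvx * t, dy + dvy * t)


def collision_warning(a: Track, b: Track, radius_m: float = 2.0,
                      horizon_s: float = 5.0) -> Optional[float]:
    """Return seconds until the predicted near-collision, or None if no alert is needed."""
    t, dist = closest_approach(a, b, horizon_s)
    return t if dist < radius_m else None
```

For example, a car at the origin moving at 10 m/s along the x axis and a pedestrian starting at (30, -3) and walking at 1 m/s toward the road are predicted to meet after 3 seconds. Because an alert time of 0.0 is a valid result, callers should compare the return value against `None` rather than test its truthiness.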

Trajectory and collision prediction ran simultaneously and efficiently on our Lithops engine, which was adapted during the project for fast, low-overhead processing of edge-to-cloud data over a serverless infrastructure.

Scheduling of the overall workflow under time constraints, as well as sharing of information from edge devices to the cloud, was carried out with the COMPSs and dataClay tools from BSC. UNIMORE converted video input into a symbolic stream of objects using a high-speed deep neural network such as YOLO, while boosting mobile GPU performance beyond default specifications.

For CLASS performance evaluations, we also contributed a first-ever comprehensive benchmark tool for Apache OpenWhisk, called owperf, with specific coverage of event processing. CLASS further initiated a new direction for serverless computation in a framework called EXPRESS, which is initially used for low-overhead execution.

The CLASS project ended in June 2021, but we’re just beginning to analyze the results. The collision avoidance application could be integrated with V2X products and offerings. Core software assets developed during the project could be used for new applications, offerings, and other EU projects, and have already been contributed to other open-source initiatives. For IBM Research, those include a set of novel latency-optimized scalable compute tools and engines, such as a low-latency Lithops engine, the EXPRESS framework for low-latency serverless execution, and the owperf benchmark tool.

The urban lab set up in Modena is already being used by several other EU projects. Its infrastructure is constantly evolving, with additional support from the Italian Ministry of Transportation.

Above all, CLASS has demonstrated the ability to connect a city's traffic dots: helping complex modern information technology deliver direct value to drivers and city managers, paving the way to the smart cities of the future.

The CLASS project received funding from the European Union’s Horizon 2020 research and innovation program under the grant agreement No. 780622.